Real estate teams are operating in a data environment that is bigger, faster, and more fragmented than ever. Listings go live and disappear in hours, price cuts happen quietly, and the portals that matter most in each country are rarely the same global “top 5.” If you are a marketplace, an agency, or an investor, you do not just need some property data — you need fresh, structured, and reliable datasets from the platforms that shape your market.

That is exactly what ScrapeIt’s Real Estate Scraping Services are built for: fully-managed extraction and delivery of listings, prices, and market signals from the sites you choose, on the schedule you choose.

Below you will find the top 7 real estate portals worth scraping in 2026, plus two less-obvious “hidden” sources that high-performing portals and investors use to gain an edge. For each site, we cover what makes it valuable, which data layers matter most, and the practical considerations you should plan for when collecting data at scale.

Scraping real estate data is not a technical hobby anymore — it is a competitive necessity for three core audiences:

If that matches your goals, start from the real estate sites ScrapeIt supports. It includes most major global portals plus regional leaders, and ScrapeIt can scrape any additional site you need on request.

Across most portals, teams typically collect:

The real value appears when you collect these fields consistently over time, enabling price-change alerts, inventory velocity dashboards, and investment models.

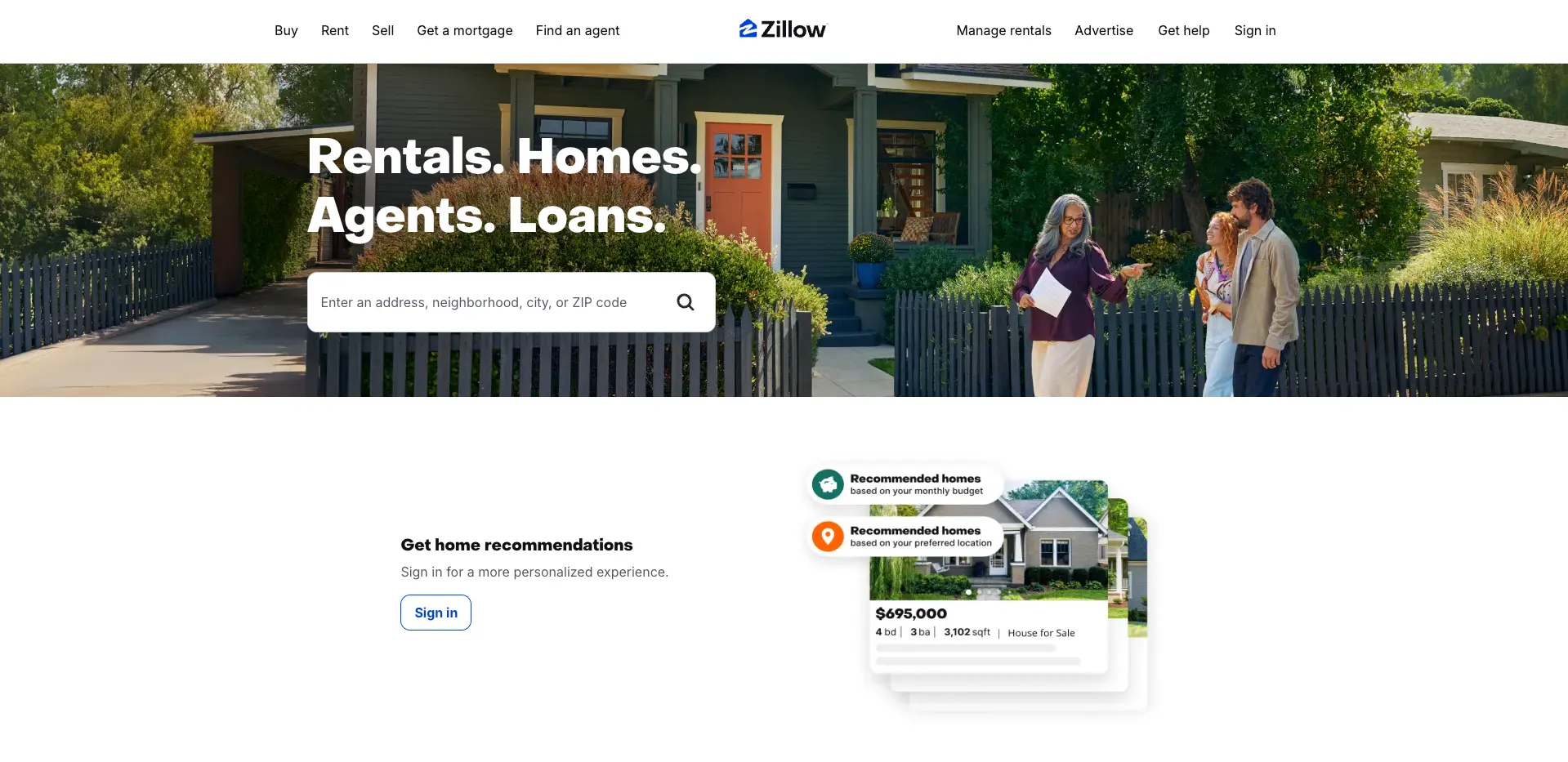

What it is: Zillow is the largest U.S. residential marketplace, covering sales, rentals, and rich historical context for each property. ScrapeIt provides dedicated Zillow Data Scraping for teams that need U.S. inventory at scale.

Why scrape it:

High-value data layers: listing details, price history, status changes, agent info, neighborhood or estimate-style signals (where public).

Scraping considerations: Zillow pages are heavily JavaScript-driven and frequently updated, with strong anti-bot controls. Stable extraction typically relies on capturing embedded structured data and monitoring layout/API changes over time.

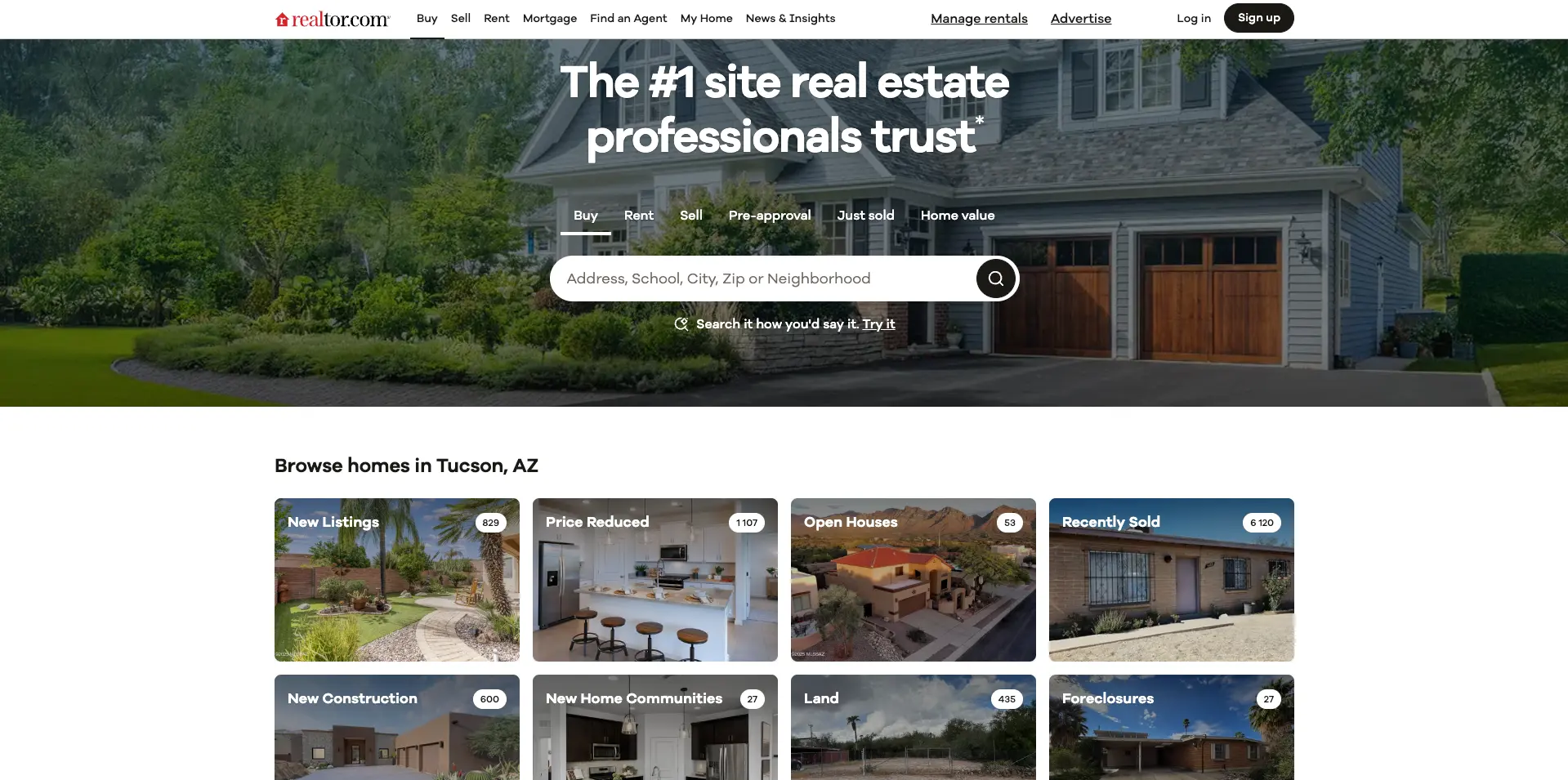

What it is: Realtor.com is a highly trusted, MLS-connected U.S. portal known for data reliability. ScrapeIt offers a specialized Realtor.com Scraper.

Why scrape it:

High-value data layers: MLS-grade status, listing timelines, property specs, broker and office metadata.

Scraping considerations: Most listing pages are dynamically assembled, and search/filtering logic is frequently API-based. You should plan for pagination variability and consistent rate-limit handling; public contact fields require careful compliance checks.

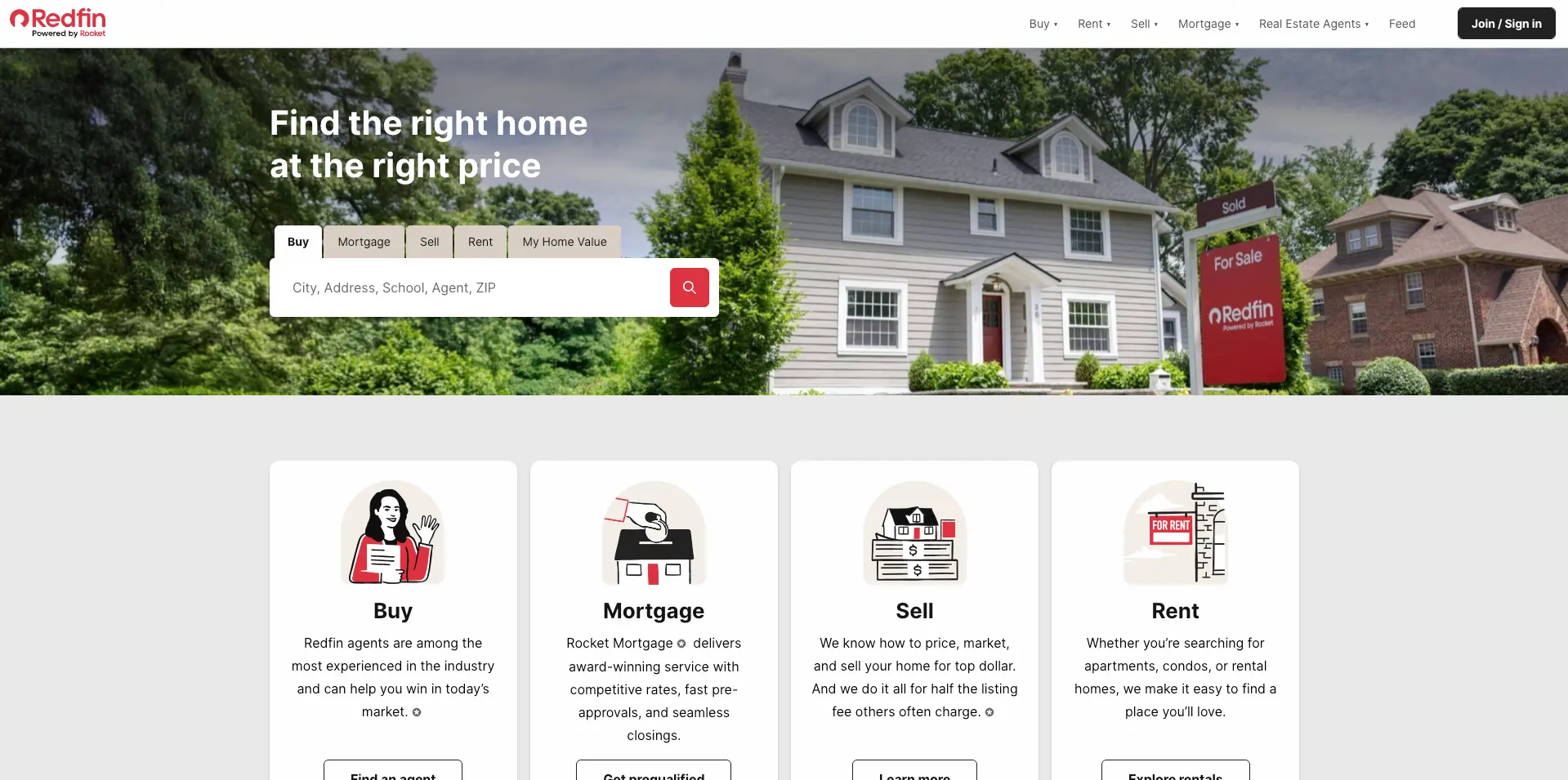

What it is: Redfin is a major North American portal combining listings with strong map UX and fast refresh cycles. ScrapeIt scrapes Redfin among the portals it supports for ongoing extraction.

Why scrape it:

High-value data layers: listing specs, price-change history, time-on-market signals, agent/office fields.

Scraping considerations: Listing data is often loaded through map-driven background requests, so the structure can vary depending on filters and viewport. Normalization across regions is essential if you are building a unified feed.

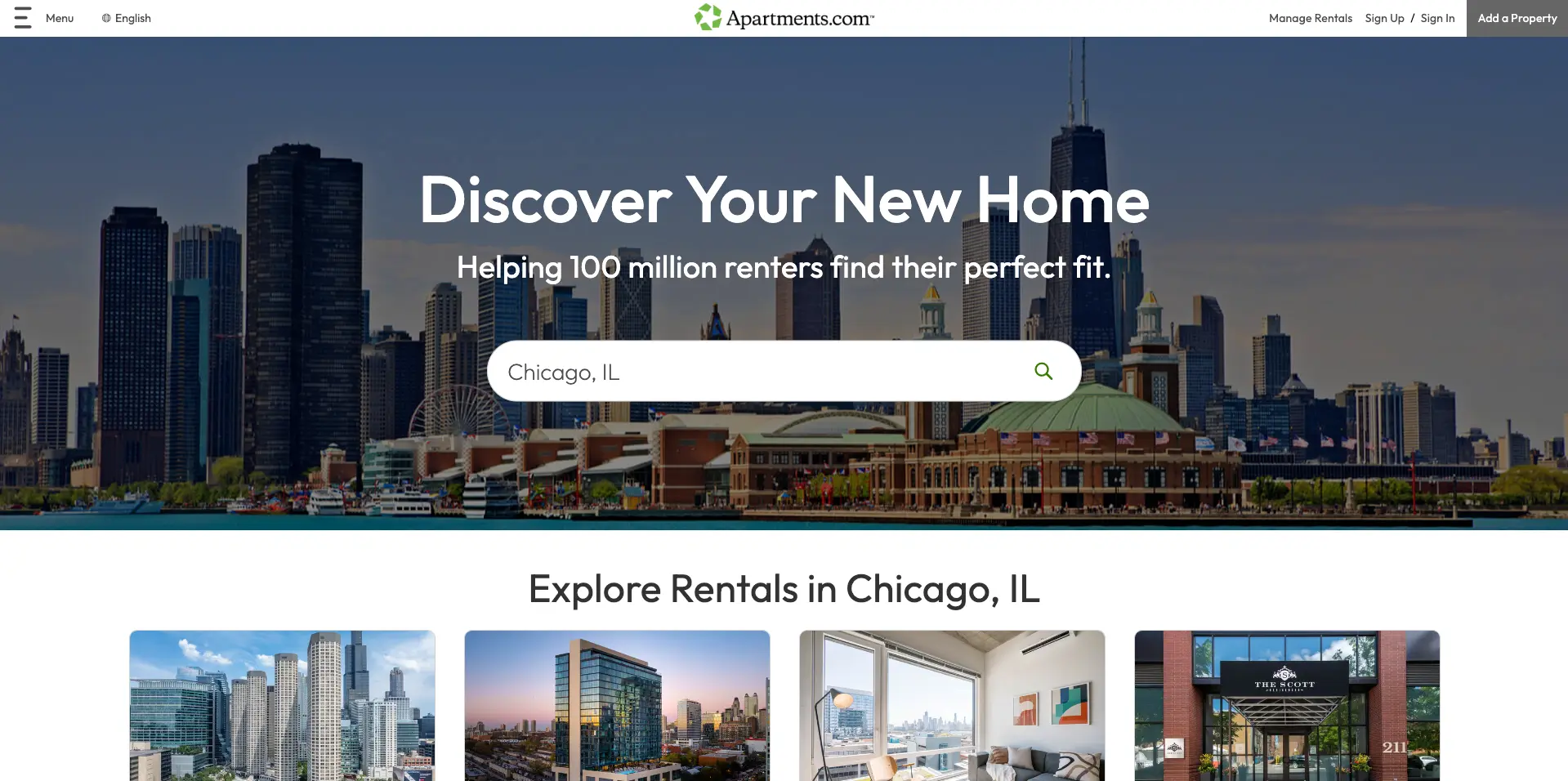

What it is: Apartments.com is the largest U.S. rental marketplace, anchored on multi-unit buildings, communities, and verified landlords. ScrapeIt supports Apartments.com scraping for rental intelligence.

Why scrape it:

High-value data layers: rent by unit type, floorplans, lease terms, amenities, pet/parking rules, availability and move-in windows.

Scraping considerations: Buildings often contain nested unit lists. To avoid misleading analysis, you need clean building-level IDs, unit-level deduplication, and a refresh cadence that matches market churn.

What it is: Rightmove is the UK’s #1 portal for sale and rental property. ScrapeIt provides a managed Rightmove Data Scraper Service.

Why scrape it:

High-value data layers: property specs, listing status changes (available / STC / under offer), agent profiles, postcode and radius metadata.

Scraping considerations: Strong bot protection and evolving layouts are normal. Rightmove also enforces practical limits on very deep pagination, so extraction strategies must be tailored to geographic search logic.>

What it is: ImmoScout24 is Germany’s leading real estate marketplace. ScrapeIt runs a dedicated ImmoScout24 Data Scraping service.

Why scrape it:

High-value data layers: price, location hierarchy, room counts, building condition, and energy efficiency / certificate fields that are especially relevant in Germany.

Scraping considerations: Localization matters: German labels, EU numeric formatting, and energy-class parsing require high-quality normalization. Expect cookie/consent layers and dynamic listing components.

What it is: Hemnet is Sweden’s #1 property portal and a core Nordic data source. ScrapeIt offers a managed Hemnet Data Scraper.

Why scrape it:

High-value data layers: listing specs, price trends, time-on-market behavior, and user-interest indicators (when public).

Scraping considerations: Unit conventions (sqm, SEK) and Scandinavian category structures need standardized mapping. Behavioral/time-series fields are only useful if captured consistently on a fixed cadence.

Mainstream portals are necessary — but not sufficient. These two specialized sources unlock datasets that most competitors do not track.

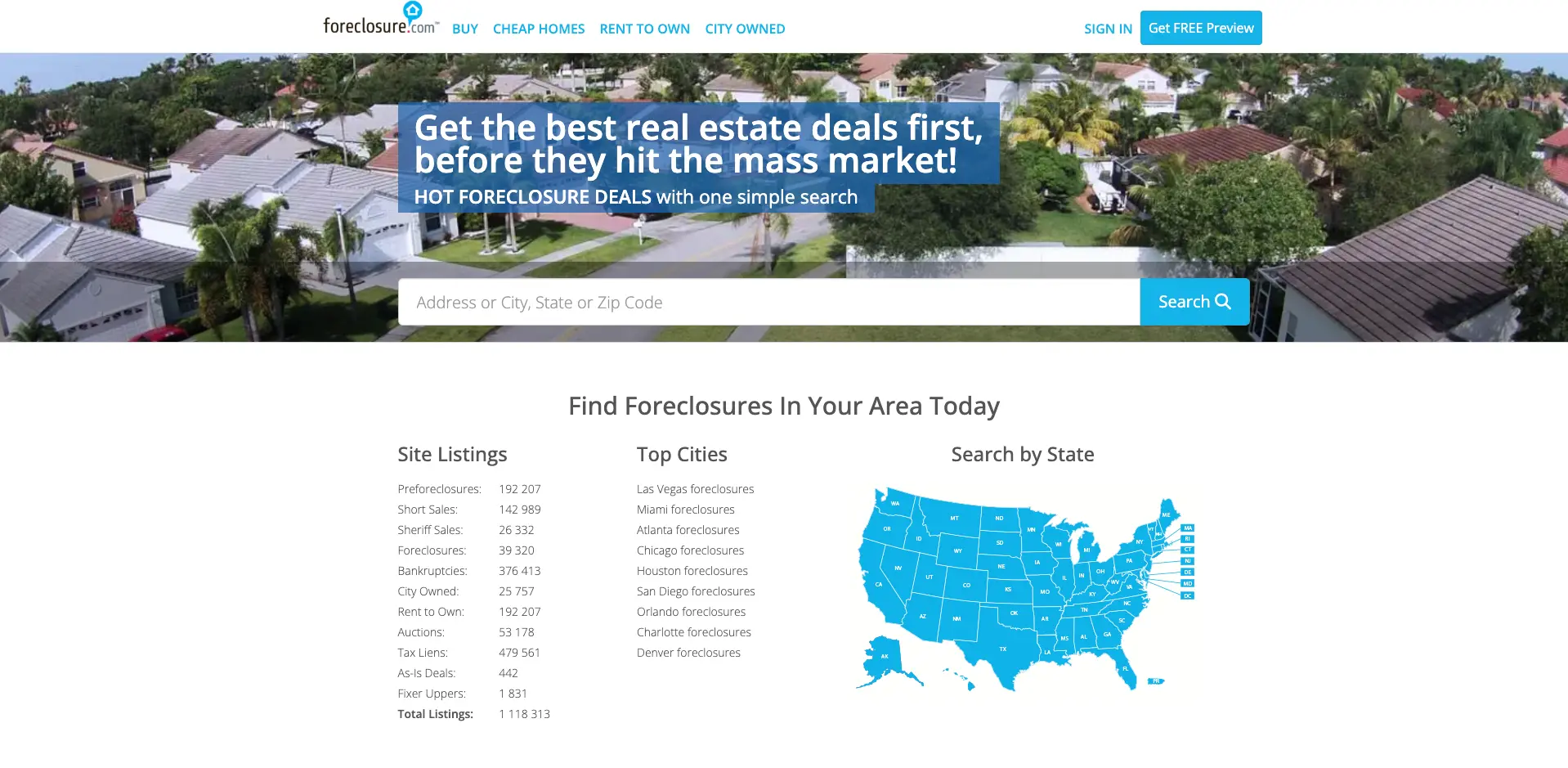

What it is: Foreclosure.com aggregates U.S. foreclosure, pre-foreclosure, and auction listings and updates its database multiple times per day.

Why scrape it:

High-value data layers: foreclosure stage, auction timelines, lender or government source tags (where public), property specs, and price banding.

Scraping considerations: Some deeper detail layers may be account-gated; extraction should focus on public pages and respect platform terms. Listing churn is high, so daily refresh is usually required.

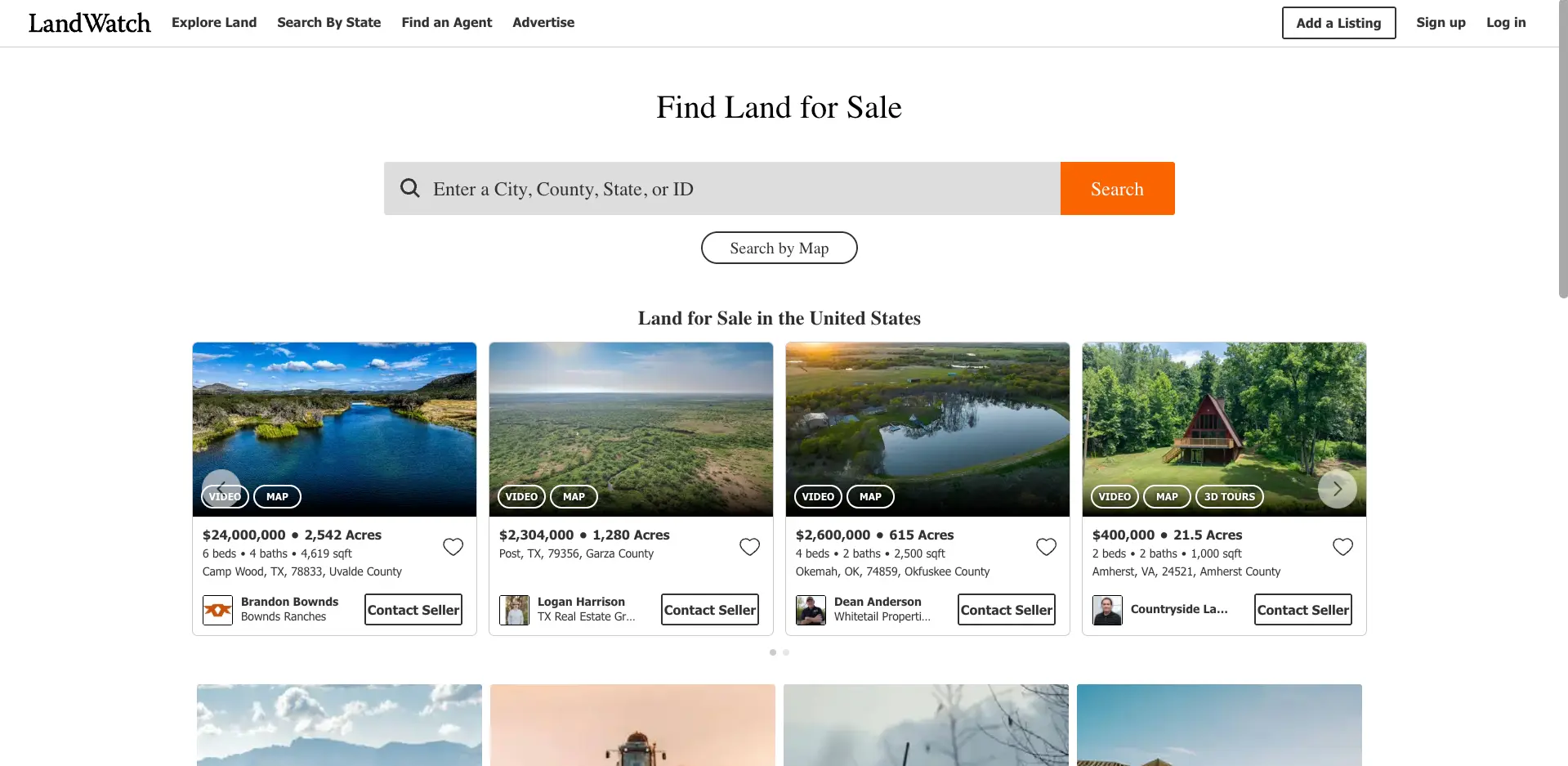

What it is: LandWatch is part of the Land.com Network and a leading marketplace for rural land, farms, ranches, hunting and development parcels.

Why scrape it:

High-value data layers: acreage, parcel type, zoning or usage descriptors (where public), proximity/location tags, and price per acre signals.

Scraping considerations: Expect large photo/map payloads and many sub-types. You should normalize acreage vs sqm/sqft and deduplicate cross-posted parcels across the Land.com network.

Across nearly every market in 2026, teams run into the same operational risks:

If you are building an internal scraper, these issues turn into long-term engineering cost. If your priority is decision-ready data, a managed pipeline is typically more efficient.

ScrapeIt is not a self-serve tool. It is a fully managed data pipeline:

This model is designed for real estate teams that care about accuracy, uptime, and long-term stability, not maintaining scrapers.

Real estate winners in 2026 will be the teams that treat property data like a live market feed, not an occasional research task.

Start with the seven portals above to cover the biggest residential markets worldwide. Then add hidden sources like Foreclosure.com and LandWatch to get earlier signals on distressed inventory and land opportunities others miss.

If you want a clean, automated dataset from any of these sites, open the Real Estate Scraping Services page and tell ScrapeIt what you need — we will deliver the data ready for analysis.

1. Is it legal to scrape real estate portals?

In most jurisdictions, collecting public data is legal if you respect site terms, privacy rules, and avoid misuse of personal data.

2. How often should I refresh listings?

Daily for fast markets and price signals, weekly for trends/benchmarks, one-off for market entry or audits. ScrapeIt supports any cadence.

3. Can ScrapeIt combine multiple portals into one dataset?

Yes. ScrapeIt routinely merges sources and normalizes fields across portals and regions.

4. How do you handle duplicates across portals?

ScrapeIt extracts stable public identifiers, normalizes key fields, applies your de-dup rules (e.g., address+sqm+photos), and delivers a clean master feed.

5. What’s the best way to start if I’m unsure about portals or fields?

Start with 1–2 markets and one core portal, define a minimal field set, request a small ScrapeIt sample, validate, then scale to more sites and frequencies.

Let us take your work with data to the next level and outrank your competitors.

1. Make a request

You tell us which website(s) to scrape, what data to capture, how often to repeat etc.

2. Analysis

An expert analyzes the specs and proposes a lowest cost solution that fits your budget.

3. Work in progress

We configure, deploy and maintain jobs in our cloud to extract data with highest quality. Then we sample the data and send it to you for review.

4. You check the sample

If you are satisfied with the quality of the dataset sample, we finish the data collection and send you the final result.

Scrapeit Sp. z o.o.

10/208 Legionowa str., 15-099, Bialystok, Poland

NIP: 5423457175

REGON: 523384582