E-commerce teams do not just need “some” competitor data anymore. They need a continuous stream of real prices, discounts, stock levels, reviews, and seller behavior from the platforms that actually shape their markets. For a marketplace or online store, that often means scraping several large sites at once and merging them into one clean dataset — instead of opening dozens of tabs every morning.

This guide walks through eight e-commerce platforms that are especially valuable to scrape in 2026 — across the US, Central & Eastern Europe, Southeast Asia, and China. For each, we explain why the platform matters, which data is worth collecting, what makes scraping technically challenging, and who typically uses that data in practice.

All of the platforms below are already covered by ScrapeIt’s Ecommerce Data Scraping Services, and most have dedicated scraper pages under Popular Sites We Scrape. That means you can skip the tooling and focus directly on the dataset your team needs.

Who This Guide Is For and How We Chose the Platforms

Teams that benefit most from e-commerce scraping

Scraped e-commerce datasets are especially useful for:

- Marketplaces – to benchmark seller pricing, define commission tiers, detect assortment gaps, and refine how products are ranked or promoted.

- Online stores and DTC brands – to monitor competitors every day, build dynamic pricing rules, and see where they over- or under-invest in assortment.

- Consulting, analytics, and research firms – to assemble cross-country panels of products, brands, and categories for market reports and strategy projects.

- Data for AI / ML teams – to train recommendation engines, demand models, and pricing algorithms on realistic, large-scale product data.

Selection criteria for the “top 8”

From ScrapeIt’s perspective, not every popular store is equally valuable to scrape. For this list, we focused on platforms that combine:

- Large, active buyer and seller bases in their region.

- Rich, structured product pages (categories, attributes, reviews, badges, logistics).

- Clear value for pricing, assortment, or sourcing decisions.

- Existing ScrapeIt expertise, with ready scrapers and case-proven setups behind them.

The result is a practical, global shortlist: Amazon, eBay, Walmart, AliExpress, Allegro, eMAG, Shopee, and 1688.com.

Quick Overview: Which Site to Scrape for Which Use Case?

Before diving into each website, here is how these eight platforms line up by geography and typical use cases:

- Amazon – global benchmark for pricing, reviews, and assortment depth.

- eBay – auctions, used goods, rare items, and “long tail” inventory.

- Walmart – US omnichannel retail, mass-market pricing signals.

- AliExpress – early indicator of low-cost products and cross-border demand.

- Allegro – core marketplace for Poland and Central Europe.

- eMAG – leading marketplace in Romania and the wider CEE region.

- Shopee – mobile-first demand across Southeast Asia and LATAM.

- 1688.com – Chinese wholesale supply-side data, closer to factories.

All of them are covered under ScrapeIt’s Ecommerce Data Scraping Services, so it is straightforward to combine them into a single, normalized dataset when needed.

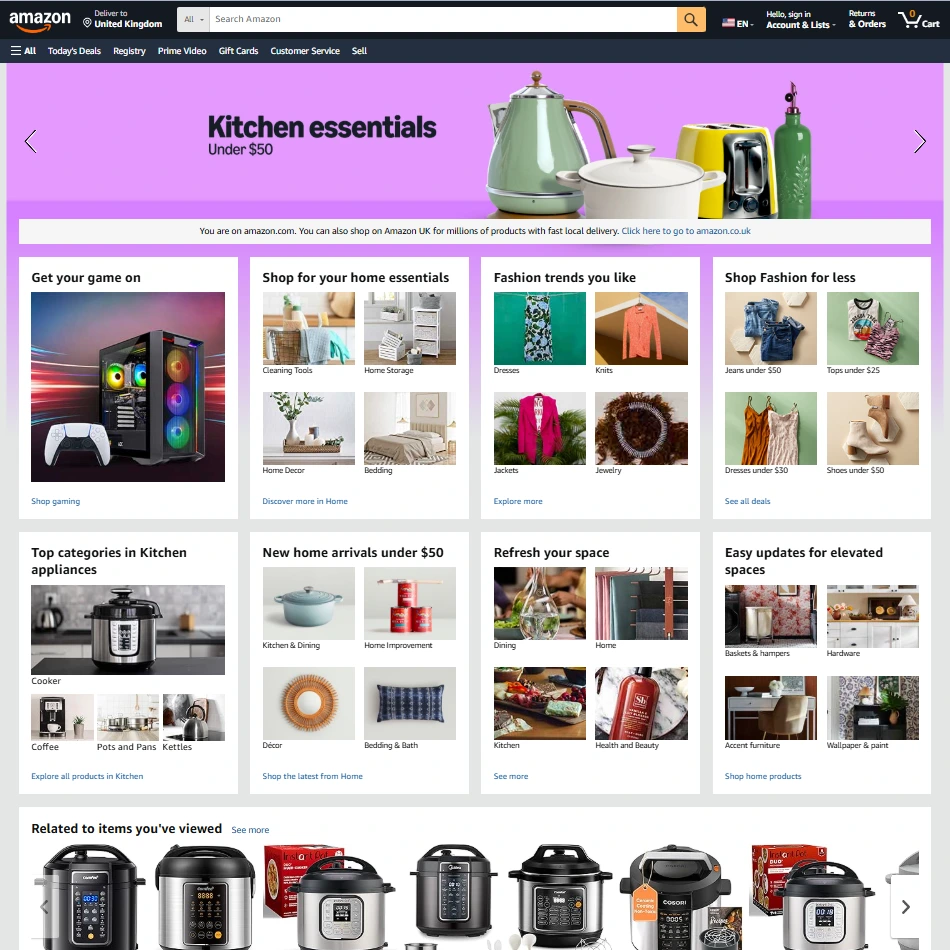

1. Amazon – The Global E-commerce Benchmark

Why Amazon data matters

Amazon is often the first place consumers check for price, availability, and reviews. For many teams, scraping Amazon is the fastest way to see “the global retail pulse” in their category: which products move up or down, how sellers position bundles, and how quickly promotions spread between countries.

ScrapeIt’s dedicated Amazon Scraper is built around that reality. It turns millions of fragmented product pages into consistent product, price, and review datasets that your business can use for dashboards, data science, or automation.

Key data points to scrape from Amazon

- Product titles, brands, categories, and ASINs.

- Current prices, historical prices (via recurring scrapes), discounts, coupons, and deal badges.

- Buy Box ownership and competing offers on the same listing.

- Ratings, review texts, review counts and trends, Q&A content.

- Variations (size, color, bundle, pack size) and their separate pricing.

- Availability, shipping methods, and delivery times (including Prime flags).

Scraping challenges & nuances

- Strong anti-bot protection. Amazon changes layouts and rate limits regularly. This is why many teams offload the technical side to a managed provider such as ScrapeIt’s Amazon data scraping service.

- Many local domains. Pricing, taxes, and availability differ across .com, .de, .co.uk, etc., so careful normalization is important.

- Multiple page types. Search results, category pages, and product pages each have different data structures and anti-bot patterns.
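
The normalization needed across local domains can be illustrated with a small Python sketch. It is not ScrapeIt's implementation: the `DOMAIN_FORMATS` table, the function name, and the sample price strings are assumptions made for illustration, and a real setup would cover more domains and edge cases.

```python
import re
from decimal import Decimal

# Hypothetical per-domain conventions: (currency code, decimal separator).
DOMAIN_FORMATS = {
    "amazon.com": ("USD", "."),
    "amazon.co.uk": ("GBP", "."),
    "amazon.de": ("EUR", ","),
}

def normalize_price(raw: str, domain: str) -> tuple[Decimal, str]:
    """Turn a displayed price string into (amount, currency code)."""
    currency, decimal_sep = DOMAIN_FORMATS[domain]
    # Drop currency symbols and spaces; keep digits and both separators.
    digits = re.sub(r"[^\d.,]", "", raw)
    thousands_sep = "," if decimal_sep == "." else "."
    # Remove the thousands separator, then use "." as the decimal point.
    digits = digits.replace(thousands_sep, "").replace(decimal_sep, ".")
    return Decimal(digits), currency

print(normalize_price("$1,299.00", "amazon.com"))
print(normalize_price("1.299,00 €", "amazon.de"))
```

Keeping the amount as a `Decimal` next to an explicit currency code avoids rounding surprises when prices from several domains are later converted or compared.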

Who typically uses Amazon datasets?

- Marketplaces and online stores – for price parity, assortment planning, and promo tracking.

- Brands and manufacturers – for MAP monitoring, shelf share, and review analysis.

- Consulting and research – for multi-country panels and trend reports.

- AI / ML teams – for training recommendation and pricing models.

If Amazon is your primary benchmark, it usually makes sense to start with an Amazon-first scraping project and then extend it with 2–3 more marketplaces.

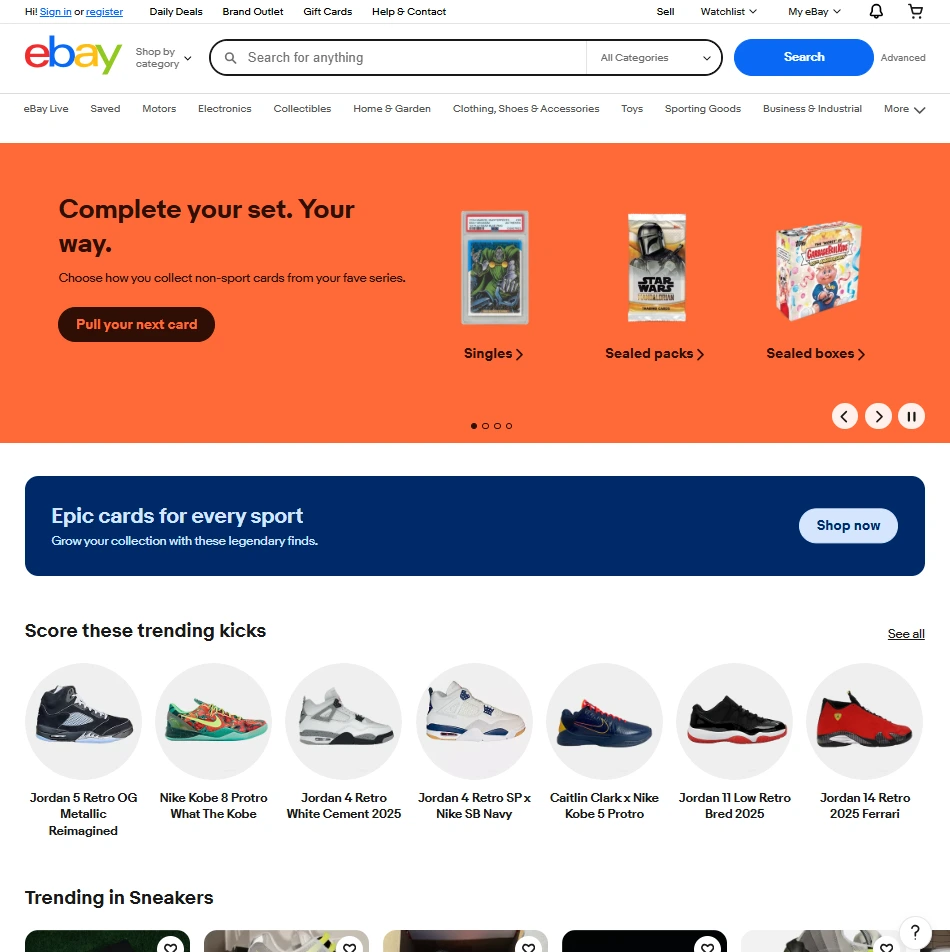

2. eBay – Auctions, Used Goods, and “Hidden” Inventory

Why eBay is different from classic marketplaces

Where Amazon is optimized around standardized product listings, eBay is a live marketplace with auctions, fixed-price offers, refurbished goods, collectibles, and private sellers. Scraping eBay gives you visibility into secondary markets and long-tail demand that rarely shows up elsewhere.

ScrapeIt’s eBay Data Scraper is built to capture both the structured product information and the time-sensitive signals that define eBay’s “organized chaos.”

Key data points to scrape from eBay

- Listing titles, detailed descriptions, and item specifics (brand, material, size, etc.).

- Current price, “Buy It Now” price, bid history indicators, and shipping costs.

- Product condition (new, used, refurbished) with seller wording.

- Seller profiles, ratings, feedback scores, and location.

- Signals like “items sold,” “watchers,” and time left for auctions.

- Buyer reviews, ratings, and review timelines.

Scraping challenges & nuances

- Auction dynamics. For auctions, timing matters: price and interest can change minute by minute, so scheduling scrapes correctly is critical.

- Mixed data quality. Private sellers may not fill in all fields; you need robust parsing and post-processing to standardize attributes.

- Multiple categories and “Motors”. Scraping eBay Motors and niche categories typically requires custom filters, which ScrapeIt can configure in its custom eBay scraper setups.
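
One way to think about the auction timing point is a polling interval that tightens as the end time approaches. The sketch below is illustrative only: the thresholds and function names are assumptions, not eBay rules or ScrapeIt settings.

```python
from datetime import datetime, timedelta

def next_scrape_delay(time_left: timedelta) -> timedelta:
    """Pick a polling interval that shrinks as the auction nears its end."""
    if time_left <= timedelta(0):
        return timedelta(0)          # auction over: fetch the final state once
    if time_left <= timedelta(minutes=10):
        return timedelta(minutes=1)
    if time_left <= timedelta(hours=1):
        return timedelta(minutes=5)
    if time_left <= timedelta(days=1):
        return timedelta(hours=1)
    return timedelta(hours=6)

def next_scrape_at(ends_at: datetime, now: datetime) -> datetime:
    """Return the next scrape time, never later than the auction end."""
    if ends_at <= now:
        return now
    return min(now + next_scrape_delay(ends_at - now), ends_at)
```

For example, a listing with three days left would be revisited every six hours, while one in its final ten minutes would be checked every minute.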

Who uses eBay scraping in practice?

- Resellers and refurbishers – to spot undervalued items and arbitrage opportunities.

- Brands – to monitor grey-market activity and second-hand price points.

- Analysts – to understand how product life cycles play out after retail.

If you care about used goods, collectibles, or resale pricing, combining eBay data with Amazon or regional marketplaces gives a much fuller picture of demand over time.

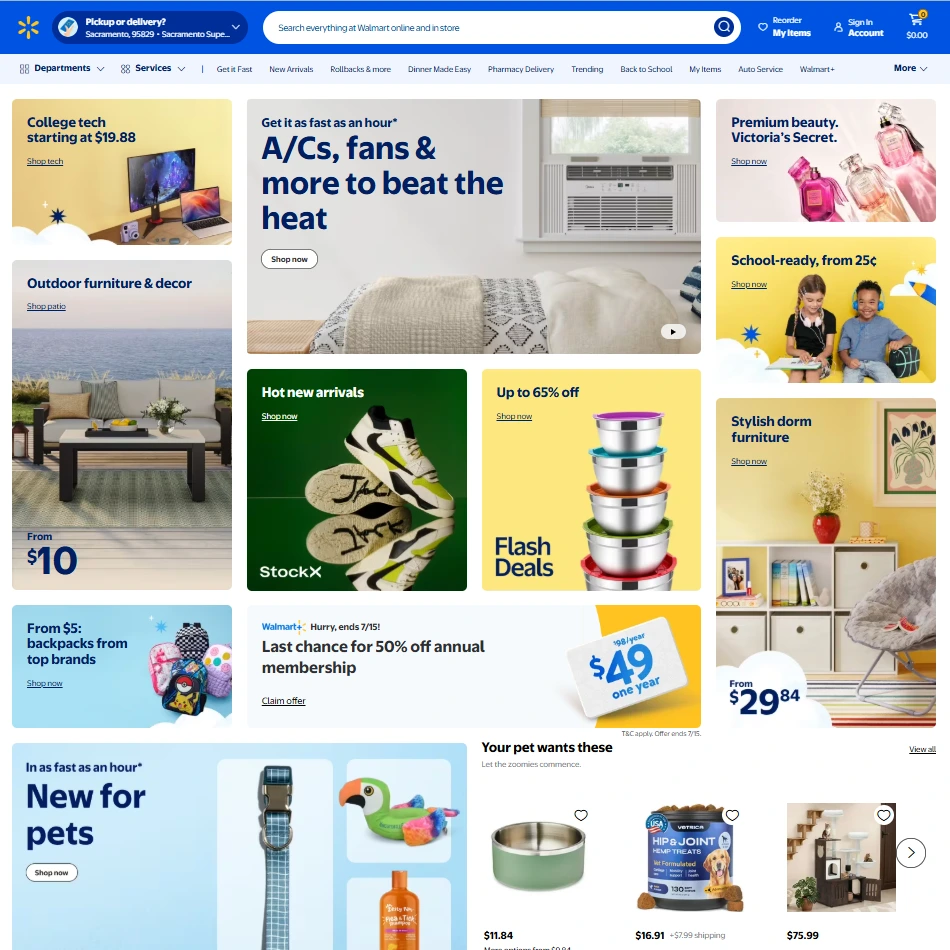

3. Walmart – Omnichannel Retail Signals from the US

Why scrape Walmart at all?

Walmart is one of the most influential retailers in the US, both online and offline. Its online catalog reflects the pricing and assortment strategies behind thousands of physical stores. Scraping Walmart allows you to see how mass-market brands are positioned in real time across categories.

The Walmart Data Scraper from ScrapeIt focuses on turning complex product and recommendation pages into structured datasets your team can use for dashboards or models.

Key data points to scrape from Walmart

- Product titles, brands, and category trees.

- List prices, rollbacks, discounts, and promotional badges.

- Shipping options, pickup availability, and estimated delivery times.

- Product images, feature lists, and long-form descriptions.

- Ratings, reviews, and “Frequently bought together” or “Similar items” blocks.

Scraping challenges & nuances

- Geolocation sensitivity. Availability and price can vary by ZIP code, which ScrapeIt handles through geo-targeted scraping in its Walmart scraper.

- Dynamic content. Recommendation blocks and comparison tables often load via APIs or JavaScript.

- Marketplace vs first-party items. It is important to capture whether Walmart or a third-party seller is offering the product.

Who uses Walmart data?

- FMCG and CPG brands – to track shelf prices and promotion mechanics.

- Retailers and marketplaces – to benchmark against Walmart’s value positioning.

- Consultants – to build US market overviews for investors and management teams.

For US-focused projects, Walmart often sits alongside Amazon in a combined e-commerce scraping setup.

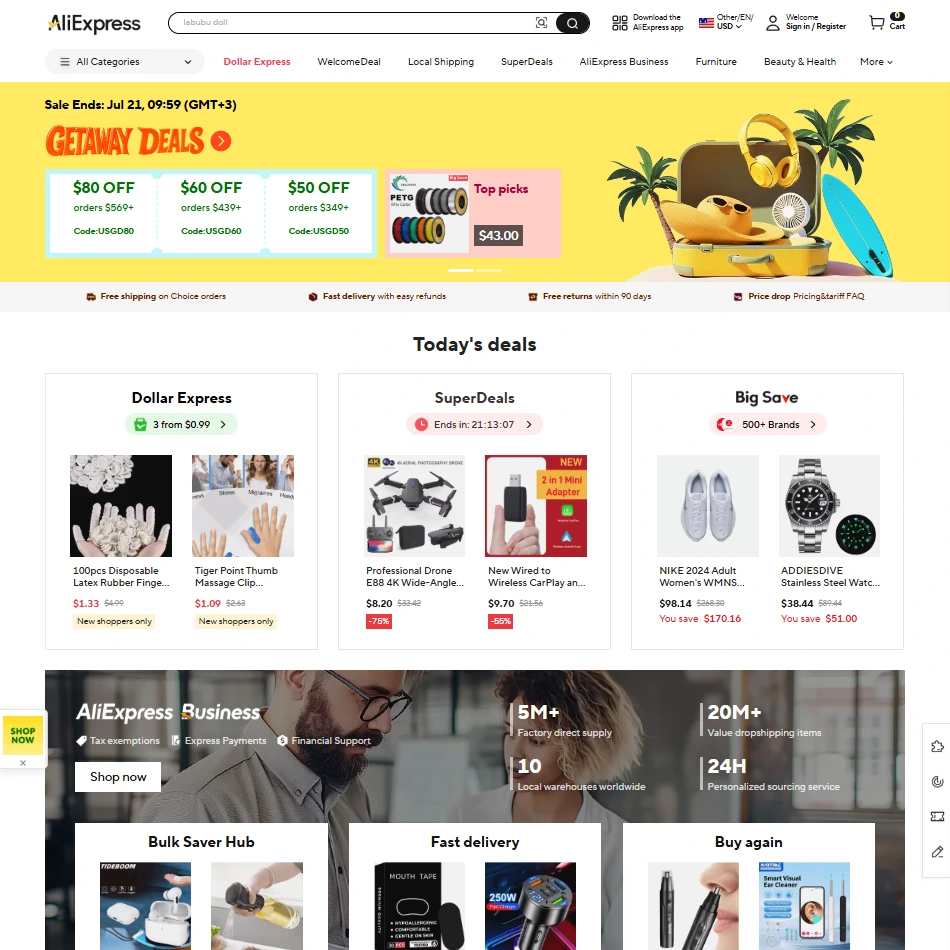

4. AliExpress – Cross-Border Demand and Product Discovery

Why AliExpress is a unique data source

AliExpress is a central marketplace for low-cost goods shipped worldwide. From a data perspective, it is where many future “winning products” first show up and gain traction before being copied or re-listed on other platforms.

ScrapeIt’s AliExpress Data Scraper helps you turn this fast-moving catalog into structured insights: which products are gaining order volume, how reviews look across countries, and where shipping remains viable.

Key data points to scrape from AliExpress

- Product titles, attributes (color, size, material), and category paths.

- Current prices, reference prices, coupon values, and tiered discounts.

- Order counts, ratings, and review text over time.

- Seller profiles, store ratings, and fulfillment options.

- Shipping methods, cost by region, and delivery estimates.

Scraping challenges & nuances

- Time-boxed promotions. Big campaigns (e.g. 11.11) massively change pricing and visibility, so schedules must be tuned accordingly.

- Region-dependent pages. Locale, currency, and language affect what appears on the page.

- High catalog turnover. Listings appear, change, and disappear quickly, which is why ScrapeIt often sets up recurring AliExpress scraping instead of one-off pulls.
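
Catalog turnover is easiest to see by comparing consecutive snapshots. The following Python sketch assumes each snapshot is a dictionary keyed by a listing ID (a hypothetical structure chosen for illustration) and reports which listings were added, removed, or changed between two pulls.

```python
def diff_snapshots(previous: dict[str, dict], current: dict[str, dict]) -> dict[str, list[str]]:
    """Compare two snapshots keyed by listing ID."""
    prev_ids, curr_ids = set(previous), set(current)
    # A listing counts as changed if any captured field differs.
    changed = sorted(
        listing_id
        for listing_id in prev_ids & curr_ids
        if previous[listing_id] != current[listing_id]
    )
    return {
        "added": sorted(curr_ids - prev_ids),
        "removed": sorted(prev_ids - curr_ids),
        "changed": changed,
    }
```

With one-off pulls there is nothing to diff against, which is why recurring scrapes matter for fast-moving catalogs.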

Who uses AliExpress data?

- Dropshippers and online stores – to identify products with traction and validate ideas quickly.

- Marketplaces – to understand cross-border competition and price corridors.

- Data for AI teams – for large-scale product and review datasets across many categories.

AliExpress is particularly powerful when combined with Shopee and 1688.com in one e-commerce scraping project.

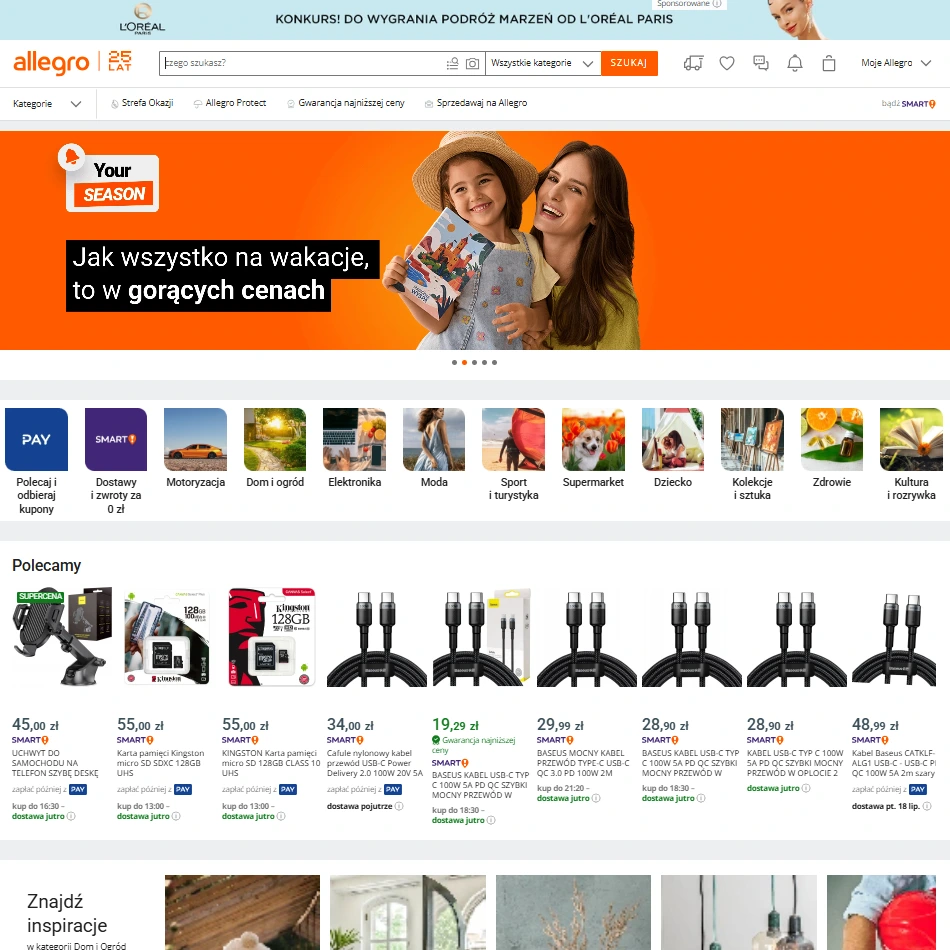

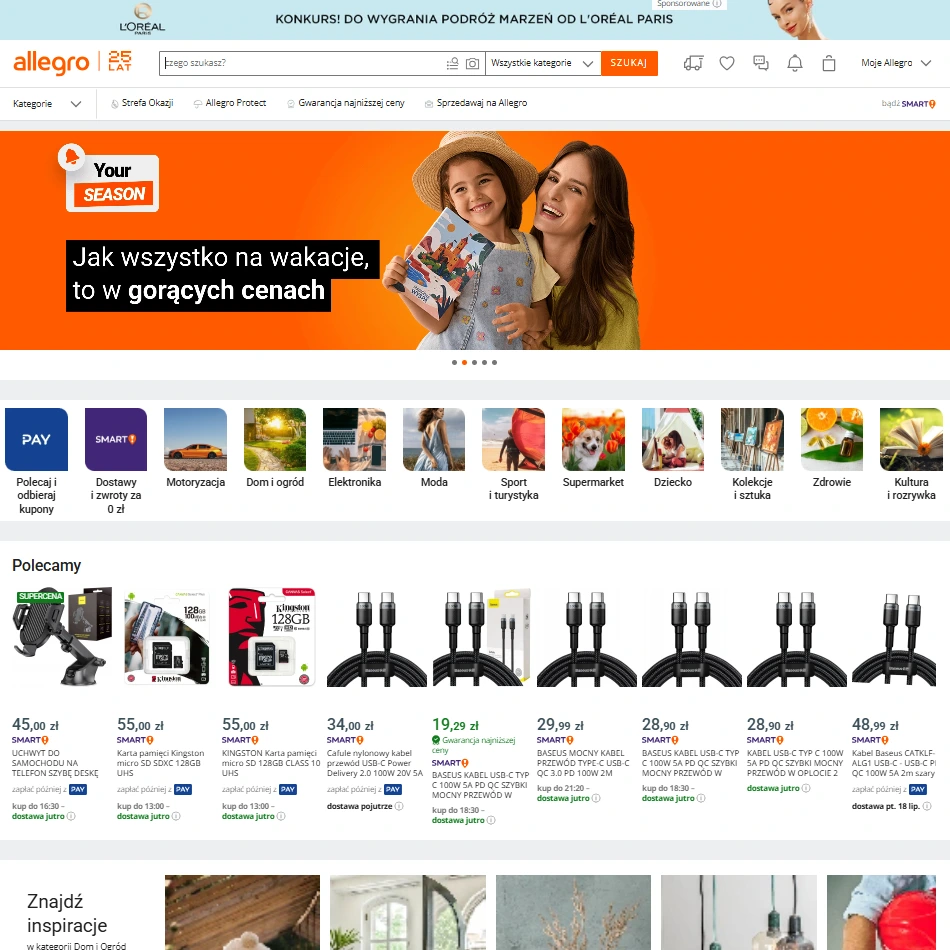

5. Allegro – The Leading Marketplace for Poland and Central Europe

Why Allegro belongs in a CEE data stack

For Poland and much of Central & Eastern Europe, Allegro is “the default marketplace” the way Amazon is in the US. If you operate in this region, scraping Allegro is essential to see real price ladders, delivery expectations, and local brand competition.

ScrapeIt’s Allegro web scraper is tuned for Polish-language pages, local currencies, and anti-bot systems, making it suitable for high-volume daily tracking.

Key data points to scrape from Allegro

- Product titles, categories, and EANs where available.

- Prices, discounts, and Allegro-specific badges (e.g. Smart!, Bestseller).

- Seller profiles, ratings, and fulfillment types.

- Delivery methods, expected timings, and local pick-up options.

- Ratings, review counts, and signals from marketplace ads and promoted listings.

Scraping challenges & nuances

- Advanced bot protection. Allegro employs CAPTCHAs and traffic controls. ScrapeIt’s Allegro scraper is configured to handle this at scale with rotation and monitoring.

- EAN-based analysis. Many data projects on Allegro use EANs as a backbone, so the scraper must capture and normalize them correctly.

- Multi-country expansion. With Allegro’s activity in multiple markets, regional differentiation may be needed in your data model.
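
To make the EAN point concrete, here is a minimal sketch of EAN-13 normalization using the standard GS1 check-digit rule (weights 1 and 3 alternating over the first 12 digits). The function name and the choice to return `None` for invalid codes are illustrative assumptions, not a description of ScrapeIt's scraper.

```python
import re
from typing import Optional

def normalize_ean13(raw: str) -> Optional[str]:
    """Return a clean 13-digit EAN, or None if the value is not a valid code."""
    digits = re.sub(r"[\s-]", "", raw)       # drop spaces and hyphens
    if not (digits.isascii() and digits.isdigit()):
        return None
    if len(digits) == 12:                     # UPC-A: prefix a zero for EAN-13 form
        digits = "0" + digits
    if len(digits) != 13:
        return None
    # Weights alternate 1, 3, 1, 3, ... over the first 12 digits.
    weighted = sum(int(d) * (3 if i % 2 else 1) for i, d in enumerate(digits[:12]))
    check = (10 - weighted % 10) % 10
    return digits if check == int(digits[12]) else None
```

Rejecting codes with a bad check digit early keeps mistyped seller input from silently breaking joins across sources later on.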

Who typically uses Allegro datasets?

- Local and regional retailers – to track price competitiveness and promotions.

- Global brands – to understand brand share and pricing in Poland and nearby markets.

- Analytics and consulting firms – to build CEE market dashboards.

If CEE is strategic for your business, Allegro is often the first source your team will add to a ScrapeIt e-commerce scraping setup, alongside eMAG.

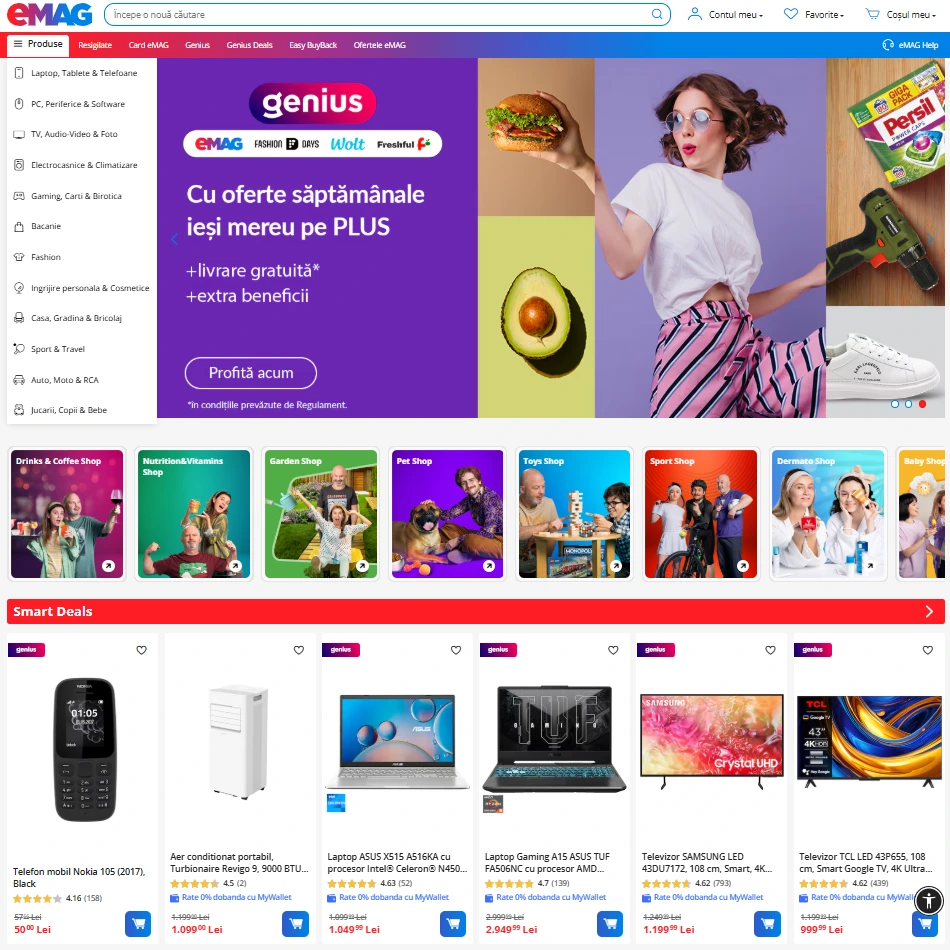

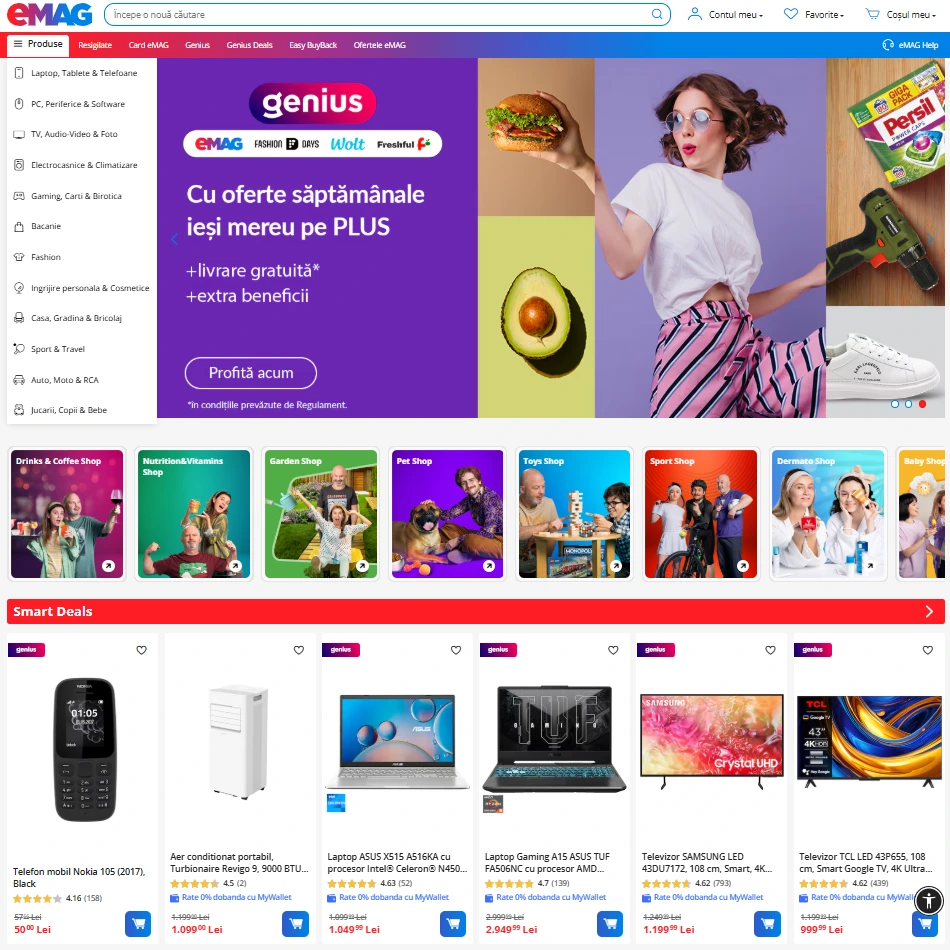

6. eMAG – Marketplace Intelligence for Romania and the Wider CEE Region

Why eMAG is important

eMAG is a dominant marketplace in Romania and a major player in surrounding markets. Its catalog and campaign structure (e.g. “Super Preț”, “WOW” deals, Genius delivery) provide a clear view of how regional e-commerce is evolving.

The eMAG data scraper from ScrapeIt is designed to capture both the product-level details and the promotional and logistics signals that matter for pricing and operations teams.

Key data points to scrape from eMAG

- Product names, brand affiliations, SKUs, and offer IDs.

- Standard prices, “Super Preț” and “WOW” deals, and Genius eligibility.

- Stock status, Easybox locker options, and cross-border shipping flags.

- Category paths, attribute filters, and brand filters.

- Ratings, review counts, and seller-specific signals.

Scraping challenges & nuances

- Multiple country sites. Romania, Hungary, and Bulgaria each use localized content and pricing. ScrapeIt’s eMAG scraper can segment and normalize these markets for you.

- Promo-heavy environment. To understand real price behavior, you need historical snapshots over weeks or months.

- Category-specific attributes. Different categories expose different specs; the scraper must adapt accordingly.
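
The value of historical snapshots in a promo-heavy environment can be shown with a short calculation. This sketch assumes a hypothetical snapshot format (one record per day with `price` and `promo` fields) and compares the latest price with the median over the window, rather than with the listed reference price.

```python
from statistics import median

def summarize_price_history(snapshots: list[dict]) -> dict:
    """snapshots: [{"date": "2026-01-01", "price": 100.0, "promo": False}, ...]"""
    prices = [s["price"] for s in snapshots]
    typical = median(prices)                     # robust "usual" price
    promo_days = sum(1 for s in snapshots if s["promo"])
    latest = snapshots[-1]["price"]
    return {
        "median_price": typical,
        "promo_share": promo_days / len(snapshots),
        "latest_vs_median_pct": round((latest - typical) / typical * 100, 1),
    }
```

A product that is "on deal" most days will show a high `promo_share` and a latest price close to the median, which suggests the promotional price is effectively the real one.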

Who uses eMAG datasets?

- Retailers and brands expanding into CEE – to benchmark their offers against eMAG.

- Local marketplace sellers – to optimize listings and identify gaps.

- Research firms – to build regional reports on pricing and logistics options.

When you combine eMAG data with Allegro and ScrapeIt’s e-commerce scraping framework, you get a strong base for Central and Eastern Europe analytics.

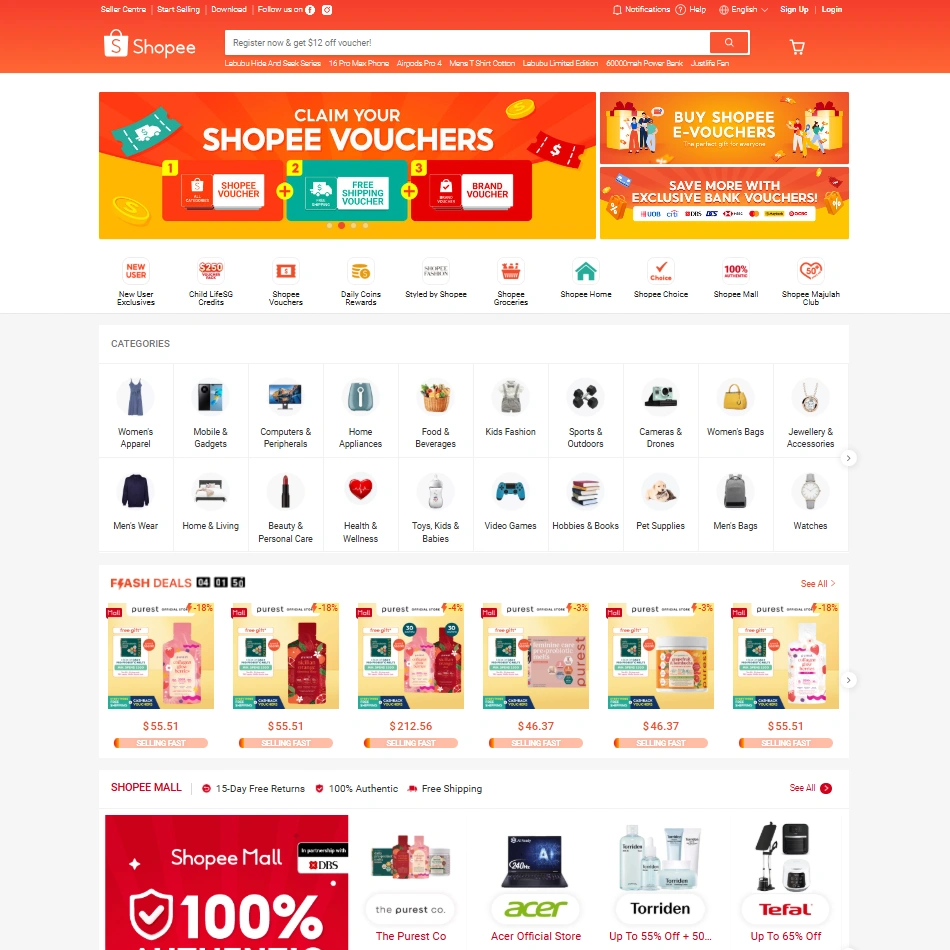

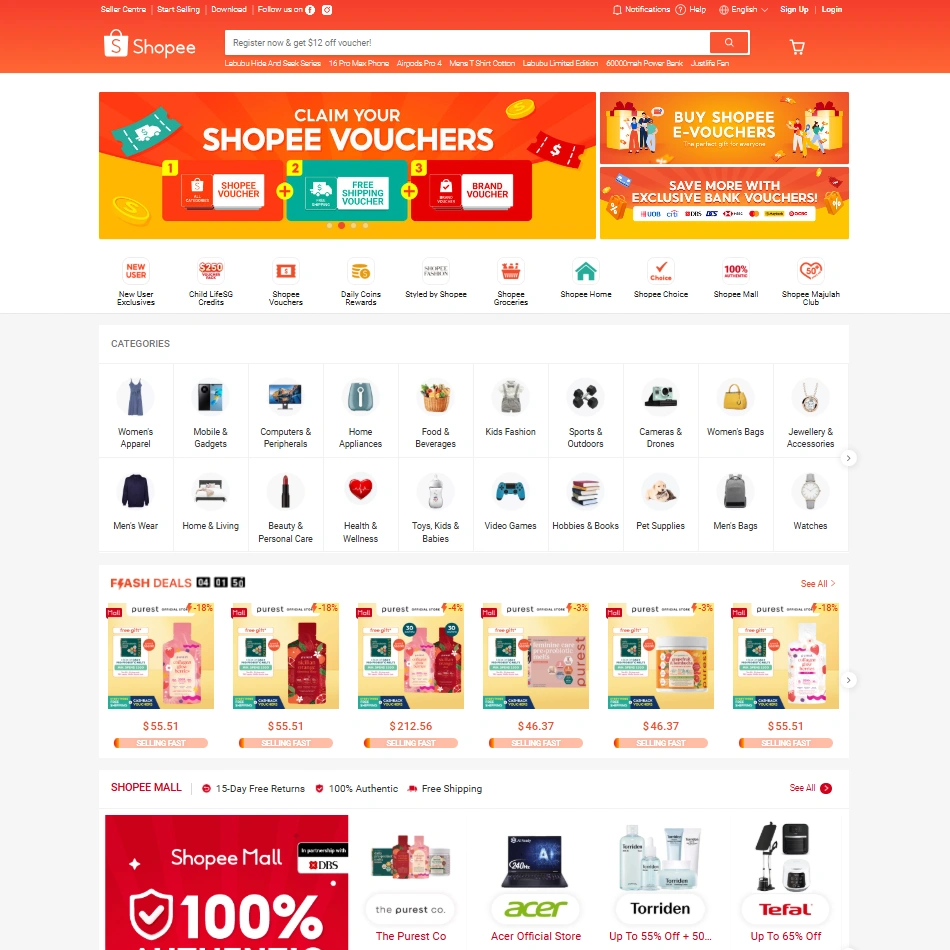

7. Shopee – Mobile-First Demand Across Southeast Asia and LATAM

Why Shopee is a goldmine for market signals

Shopee is a mobile-first marketplace that dominates e-commerce in many Southeast Asian countries and is expanding in Latin America. Its catalog, flash sales, and socially driven mechanics offer a live view into what people buy in fast-growing markets.

ScrapeIt’s Shopee Scraper Services are built to handle multiple local domains, languages, and currencies, while keeping datasets consistent for analysis.

Key data points to scrape from Shopee

- Product titles, images, categories, and variation options.

- Prices, discounts, vouchers, and flash sale tags.

- Seller information, ratings, and shop-level stats.

- Stock levels, shipping options, and delivery estimates.

- Ratings, review content, and Q&A sections.

Scraping challenges & nuances

- Many local domains. shopee.sg, shopee.co.id, shopee.com.br and others need to be scraped and normalized separately, which ScrapeIt’s Shopee scraper supports out of the box.

- High promotion frequency. Flash sales and vouchers require more frequent data pulls if you want to capture them.

- Mobile-centric design. Some elements are optimized for apps; custom logic is needed to extract all fields from web versions.

Who uses Shopee scraping?

- Brands entering Southeast Asia / LATAM – to size demand and understand price corridors.

- Aggregators and resellers – to track best-sellers and copy winning offers into other channels.

- Market research and AI teams – for behavior and recommendation analysis in mobile-first markets.

Shopee is a natural counterpart to AliExpress in regional e-commerce data scraping projects.

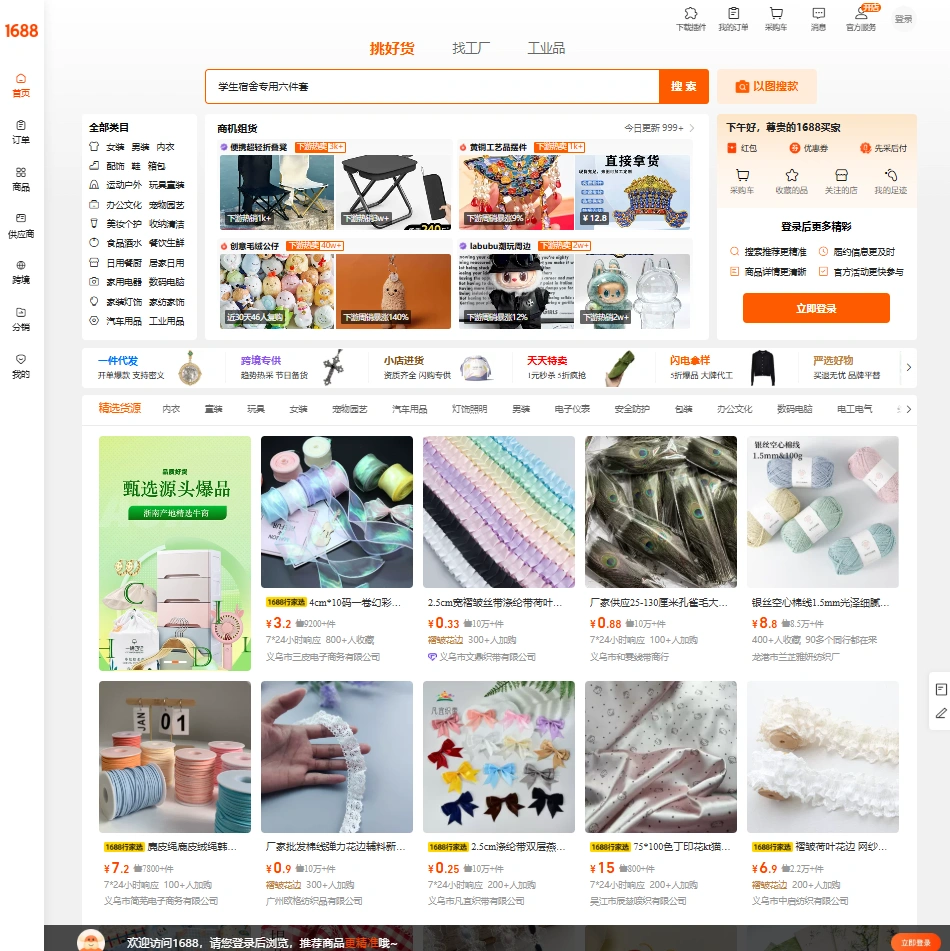

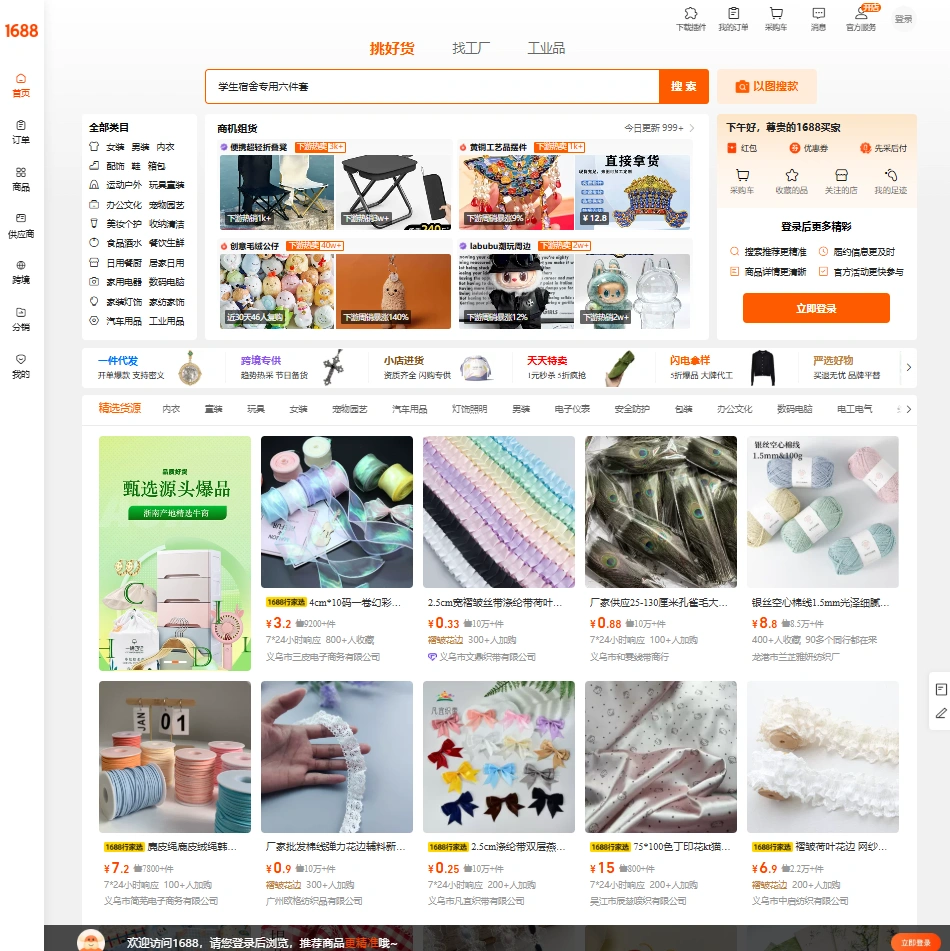

8. 1688.com – Inside China’s Domestic B2B Supply Market

Why 1688.com is essential for sourcing and supply-side analysis

1688.com is Alibaba Group’s domestic B2B platform, serving Chinese wholesalers, factories, and local retailers. Unlike export-oriented sites, it often exposes lower prices, richer specification tables, and more granular supplier data. For sourcing and private-label projects, scraping 1688 can reveal opportunities long before they appear on global marketplaces.

ScrapeIt’s 1688.com Scraper handles language, layout, and supplier-level complexity so that you receive clean product and vendor datasets instead of raw HTML.

Key data points to scrape from 1688

- Product titles, category structure, specifications, and images.

- Current and bulk pricing tiers, including discount ladders.

- Minimum order quantities and packaging details.

- Supplier names, ratings, tenure, transaction volumes, and certifications.

- Buyer reviews, repeat order indicators, and response speed statistics.

Scraping challenges & nuances

- Chinese-language pages. Both the content and structure target domestic buyers, so ScrapeIt’s 1688 scraper includes robust parsing logic for Chinese text and numeric formats.

- Rich spec tables. Products often have long specification tables that require careful mapping into a usable schema.

- Export-ready vs local-only suppliers. Part of the value is separating suppliers that can support global fulfillment from strictly local ones.
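
Two of the parsing tasks mentioned for 1688 lend themselves to a short example: Chinese numeric formats (where 万 means 10,000 and 亿 means 100,000,000) and tiered wholesale pricing. The function names, input strings, and tier structure below are assumptions for illustration; real pages vary and need more defensive handling.

```python
from decimal import Decimal
from typing import Optional

CN_UNITS = {"万": Decimal(10_000), "亿": Decimal(100_000_000)}

def parse_cn_number(text: str) -> Decimal:
    """Parse strings such as '1.2万' or '3,500+' into a number."""
    cleaned = text.strip().rstrip("+").replace(",", "")
    multiplier = Decimal(1)
    if cleaned and cleaned[-1] in CN_UNITS:
        multiplier = CN_UNITS[cleaned[-1]]
        cleaned = cleaned[:-1]
    return Decimal(cleaned) * multiplier

def unit_price(tiers: list[tuple[int, Decimal]], quantity: int) -> Optional[Decimal]:
    """tiers: (minimum quantity, unit price). Returns None below the MOQ."""
    best = None
    for min_qty, price in sorted(tiers):
        if quantity >= min_qty:
            best = price          # highest tier whose minimum is met
    return best
```

Storing tiers as explicit (minimum quantity, price) pairs keeps the minimum order quantity and the discount ladder in one structure that is easy to compare across suppliers.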

Who typically uses 1688 datasets?

- Private-label and sourcing teams – to compare suppliers and negotiate with data.

- Aggregators and importers – to track new product lines before they go mainstream.

- Market research and AI teams – to analyze manufacturing and supply trends at scale.

In many projects, 1688.com serves as the supply-side complement to demand-side data from AliExpress and Amazon, all collected via one ScrapeIt e-commerce scraping pipeline.

How ScrapeIt Handles the Hard Parts for You

Collecting data from these eight platforms sounds straightforward until you hit anti-bot walls, dynamic JavaScript layouts, CAPTCHAs, and constant small layout changes. That is why ScrapeIt works as a managed service rather than a DIY tool.

With Ecommerce Data Scraping Services, the process usually looks like this:

- You define the problem. Which sites, which fields, how often, and in what format.

- ScrapeIt designs the crawlers. Engineers configure scrapers for each target site, including anti-bot handling and data validation.

- Sample review. You receive a sample dataset to validate structure and coverage.

- Full-scale runs & maintenance. Jobs run on ScrapeIt’s infrastructure; you simply receive clean CSV, Excel, JSON, or direct integrations.

If your use case spans several platforms, ScrapeIt can also normalize attributes and categories into one schema — something that is hard to achieve with generic scraping tools.

Conclusion

You do not need to scrape everything at once. In practice, most teams start by picking one or two high-impact platforms — for example, Amazon and Walmart for US retail, or Allegro and eMAG for Central Europe — and extend from there.

If you are not sure where to start, browsing Popular Sites We Scrape will give you a sense of what is already available. When you are ready, send a short brief via the form on Ecommerce Data Scraping Services — what you want to monitor, which markets matter, and how often you need updates. ScrapeIt will handle the scraping; your team keeps the advantage.

FAQ

1. Is it legal to scrape these e-commerce websites?

ScrapeIt works only with publicly available information and does not collect login-protected content or personal data. However, how you use the data matters. Most clients rely on scraped e-commerce datasets for competitive analysis, pricing intelligence, assortment planning, and research — not for activities that would violate platform terms of use. For specific legal assessments, your own legal counsel should be involved.

2. How often should we scrape sites like Amazon, Allegro, or Shopee?

Frequency depends on your use case. For price monitoring & promos, daily or multiple times per day is typical for volatile categories. For assortment and catalog analysis, weekly or bi-weekly snapshots are usually sufficient. For market research studies, one-off or periodic exports often work well. When you scope a project with ScrapeIt, the team proposes a cadence that balances freshness, volume, and budget, using the web scraping price by industry framework to size the effort.

3. Can we combine data from several sites into one unified dataset?

Yes. A common setup is to scrape 3–5 platforms from the list above and merge them into a single schema with shared identifiers such as EANs, SKUs, or custom mappings. ScrapeIt frequently delivers unified datasets combining sources listed under Popular Sites We Scrape, so your internal team can work with one consolidated table instead of many fragmented exports.
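
As a rough illustration of merging on a shared identifier, the sketch below maps per-source field names onto one schema and groups records by EAN. The `SOURCES` table, the source field names, and the output shape are hypothetical; they are not ScrapeIt's actual schema.

```python
from collections import defaultdict

# Hypothetical per-source settings: currency plus source-field -> shared-field map.
SOURCES = {
    "amazon_de": {"currency": "EUR", "fields": {"ean": "ean", "title": "title", "price": "price"}},
    "allegro": {"currency": "PLN", "fields": {"ean": "ean", "name": "title", "cena": "price"}},
}

def unify(records_by_source: dict[str, list[dict]]) -> dict[str, dict[str, dict]]:
    """Return {ean: {source: normalized record}} for records that carry an EAN."""
    unified = defaultdict(dict)
    for source, records in records_by_source.items():
        config = SOURCES[source]
        for record in records:
            row = {target: record[src] for src, target in config["fields"].items() if src in record}
            if not row.get("ean"):
                continue  # no shared identifier: would need a custom mapping
            row["currency"] = config["currency"]
            unified[row["ean"]][source] = row
    return dict(unified)
```

Records without an EAN are skipped here for simplicity; in practice those are the cases where SKUs or custom mappings come into play.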

4. What formats do you deliver the data in?

Most e-commerce clients prefer CSV or Excel for direct use in BI tools. For engineering and data science teams, JSON exports or direct integrations into data warehouses are common. Every e-commerce scraping project is configured to output in the formats and structures that best fit your existing workflow.

5. How big can an e-commerce scraping project be?

ScrapeIt regularly handles multi-million-row datasets across several platforms, delivered on a daily or weekly basis. The standard plans described on Ecommerce Data Scraping Services cover everything from one-time exports to high-frequency jobs with millions of records per month, depending on your scale and requirements.