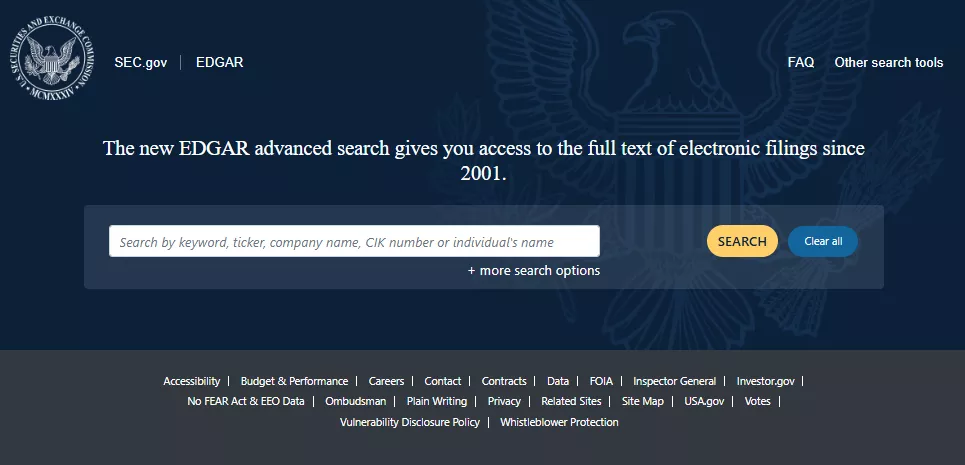

About SEC EDGAR

SEC EDGAR is the U.S. Securities and Exchange Commission’s official filing system — a public database where all publicly traded companies in the U.S. are required to submit financial disclosures, including:

- annual reports (10-K)

- quarterly reports (10-Q)

- IPO registration statements (S-1)

- insider transaction reports (Form 4)

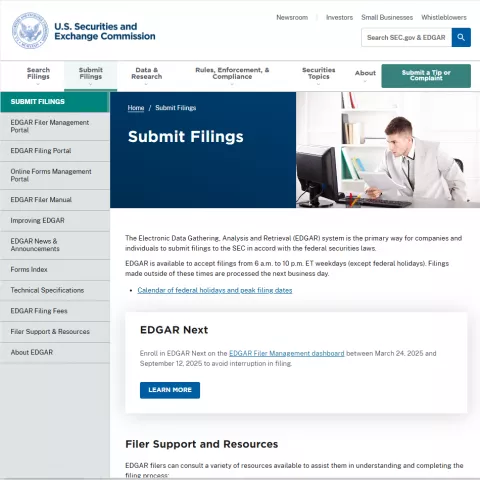

Accessible via sec.gov/edgar, it holds millions of filings, with thousands added daily — making it one of the most transparent and data-rich financial resources globally.

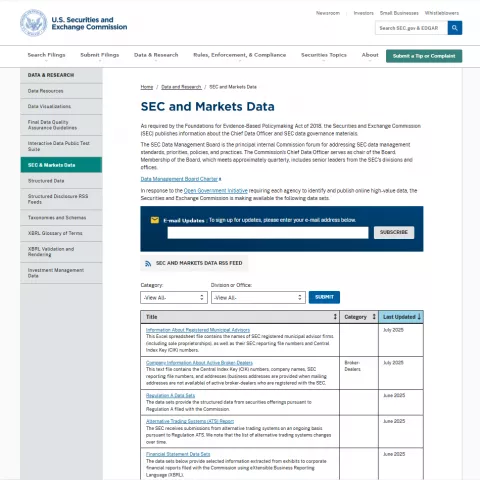

What sets EDGAR apart is its searchable, open-access structure and near-instant availability of critical information. Users can explore filings from large-cap U.S. corporations, foreign issuers, mutual funds, and even SPACs or shell companies, all written in regulatory language and accompanied by raw financial data that rarely appears in mainstream summaries or investor tools.

For investors, compliance officers, fintech developers, and market researchers, EDGAR offers an essential foundation for everything from equity analysis and risk assessment to machine-readable parsing of corporate events.
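As a rough illustration of what machine-readable handling of filings can look like, the sketch below groups a handful of filing records by the form types named earlier (10-K, 10-Q, S-1, Form 4). The record layout, the company names, and the helper names `describe_form` and `count_by_description` are all hypothetical, chosen only for this example; they do not reflect EDGAR's actual data format or any official API.

```python
from collections import Counter

# Form types named in this overview, with plain-language descriptions.
FORM_DESCRIPTIONS = {
    "10-K": "annual report",
    "10-Q": "quarterly report",
    "S-1": "IPO registration statement",
    "4": "insider transaction report",
}


def describe_form(form_type: str) -> str:
    """Return a description for a form type, or 'other' if it is not listed."""
    key = form_type.strip().upper()
    # Accept "Form 4" as well as the bare "4".
    if key.startswith("FORM "):
        key = key[len("FORM "):]
    return FORM_DESCRIPTIONS.get(key, "other")


def count_by_description(filings):
    """Count filings per description; each filing is a dict with a 'form' key."""
    return Counter(describe_form(f["form"]) for f in filings)


# Hypothetical records, invented for illustration (not real EDGAR data).
SAMPLE_FILINGS = [
    {"company": "Example Corp", "form": "10-K"},
    {"company": "Example Corp", "form": "10-Q"},
    {"company": "Example Corp", "form": "Form 4"},
    {"company": "Sample Holdings", "form": "S-1"},
    {"company": "Sample Holdings", "form": "8-K"},
]

if __name__ == "__main__":
    for description, count in count_by_description(SAMPLE_FILINGS).items():
        print(f"{description}: {count}")
```

Any form type outside the four listed here falls into an "other" bucket, so a real pipeline would need a much fuller mapping.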

Online since the mid-1990s, EDGAR has become more than a document repository — it’s a core infrastructure layer for anyone seeking verified, up-to-date company intelligence in U.S. capital markets.
