About Wikicasa: Italy’s Tech-Driven Real Estate Network

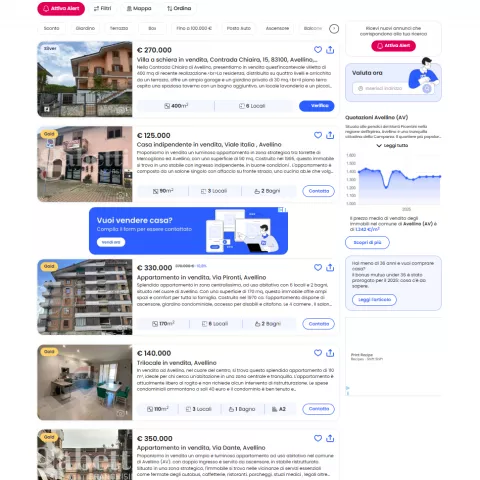

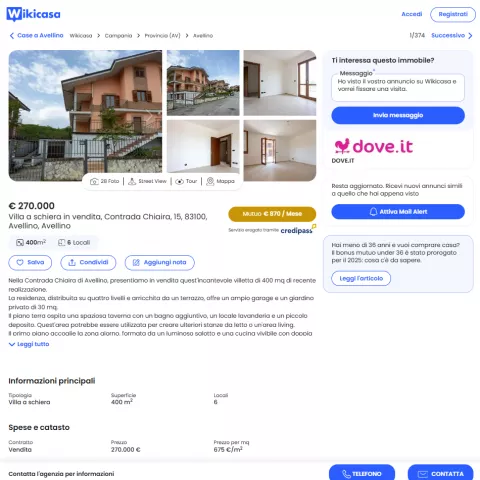

wikicasa.it is an Italian real estate platform built around agency-verified listings and advanced search tools. As part of the RE/MAX and Casa.it network, it hosts a wide selection of residential, commercial, and upscale properties, all listed by licensed professionals.

Wikicasa serves urban markets like Rome, Milan, and Naples, as well as provincial areas and popular seaside towns. Features like energy certificate filters, map-based browsing, mortgage calculators, and alerts make it ideal for both everyday users and investors. The site is fully in Italian, with a mobile-optimized interface and an app for on-the-go property tracking.

Beyond listings, Wikicasa also publishes market insights: property price trends, new-build spotlights, and neighborhood-level reports give users the context they need to buy, rent, or evaluate the market intelligently.
