Real estate teams work in a fragmented data landscape. The sites that drive demand in Boston look nothing like the ones that matter in Berlin, São Paulo, Dubai, or Mumbai. If you are a portal, agency, investor, bank, or research team, you cannot just “scrape some real estate data” — you need a structured, regional playbook of which portals to watch and how to collect their data safely at scale.

This guide maps out the real estate portals that ScrapeIt most often scrapes for clients across North America, Europe, LATAM, MENA, Africa, and Asia–Pacific. It explains what each site is good for, who typically uses it, and what to watch out for when collecting data. It is designed to sit alongside the core real estate scraping services and the catalog of real estate sites that we scrape, and to help decision-makers choose the right mix of portals per region rather than focusing on one “global top 5.”

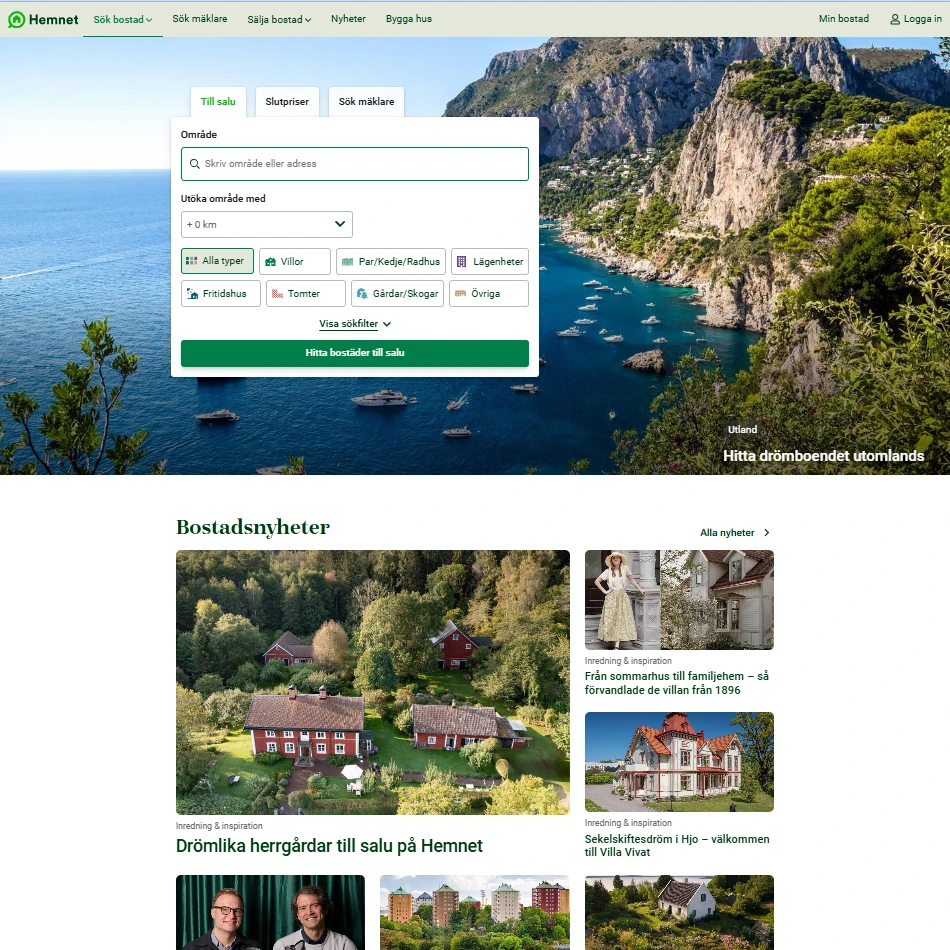

In the companion article “7 Real Estate Websites to Scrape in 2026: Plus 2 Hidden Gems”, ScrapeIt looks at cross-market leaders like Zillow, Rightmove, Realtor.com, ImmoScout24, and Hemnet. This piece takes a different angle: it assumes you already know the big names and now need to build a regional, portfolio-level scraping strategy.

Across the priority segments we see the same questions repeat:

“Once we had daily snapshots from the right mix of U.S., EU, and LATAM portals, portfolio reviews stopped being a ‘we think’ conversation and became a dashboard question.”

— Data lead, cross-border residential fund

Below, you will find the portals ScrapeIt most commonly works with for real estate clients, grouped by region and use case, with links to the corresponding managed scrapers and real-world case studies.

Think of this article as a design document for your data pipeline:

If you need a more general overview of how web scraping fits into real estate strategy (agent competitiveness, market analytics, etc.), you can pair this with the explainer article “How to Use Real Estate Web Scraping to Gain Valuable Insights.”

The U.S. and Canada are dominated by a small set of consumer brands, but each platform plays a different role in the data stack. For most clients, the “core” U.S. bundle is some combination of Zillow, Realtor.com, Redfin, Trulia, and Apartments.com, plus a niche source for sold or off-market inventory, and a dedicated feed for Canada.

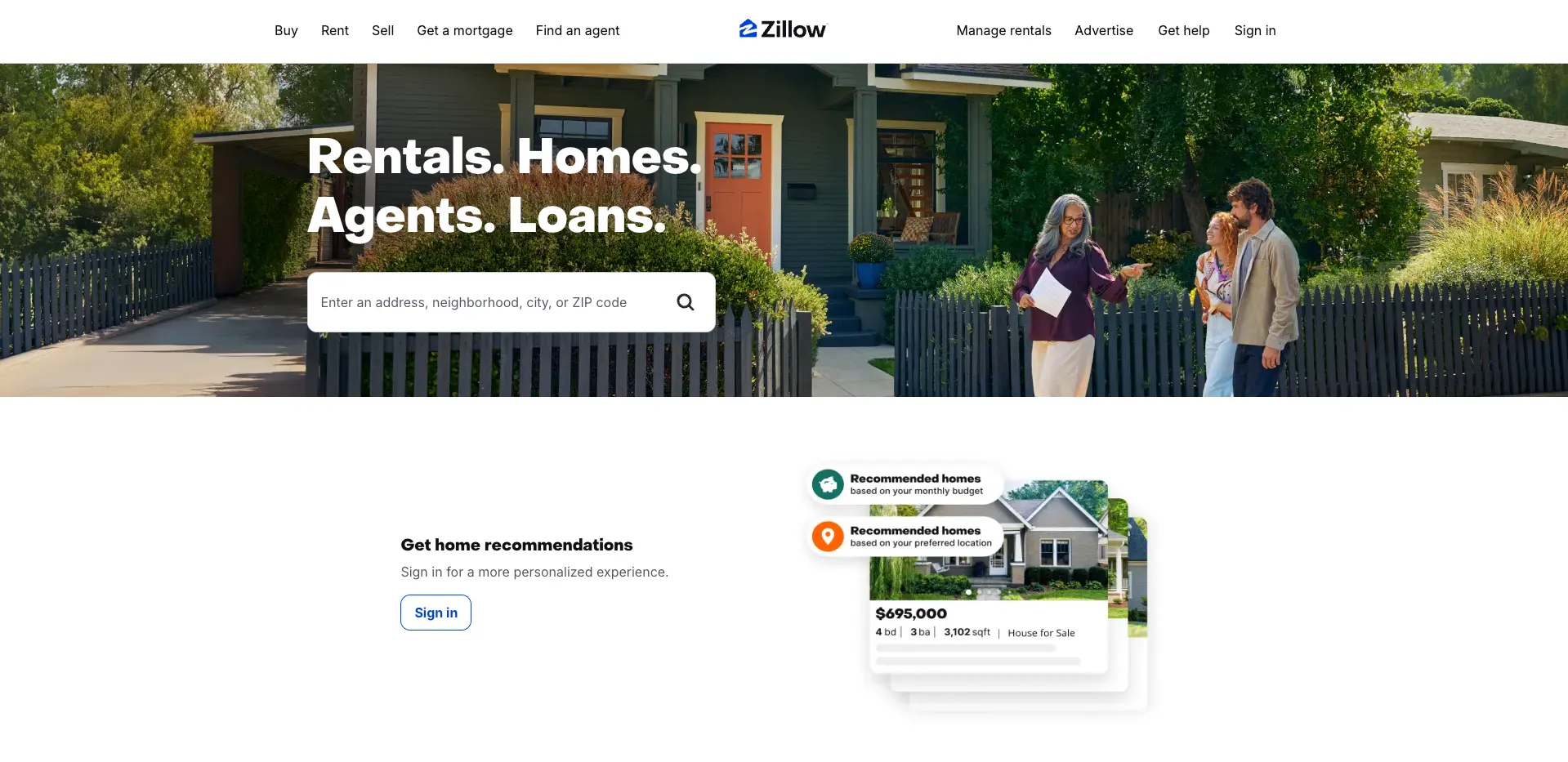

Zillow is usually the first name on any U.S. data roadmap: massive coverage, rich historical pricing, and consumer engagement metrics that help investors and portals see which properties and neighborhoods are “hot.”

Typical use cases:

Scraping & data nuances:

For a concrete example, see ScrapeIt’s case study on daily Zillow scraping for Massachusetts listings, where the team captures all sale and rental properties in a single state-level dataset refreshed every morning.

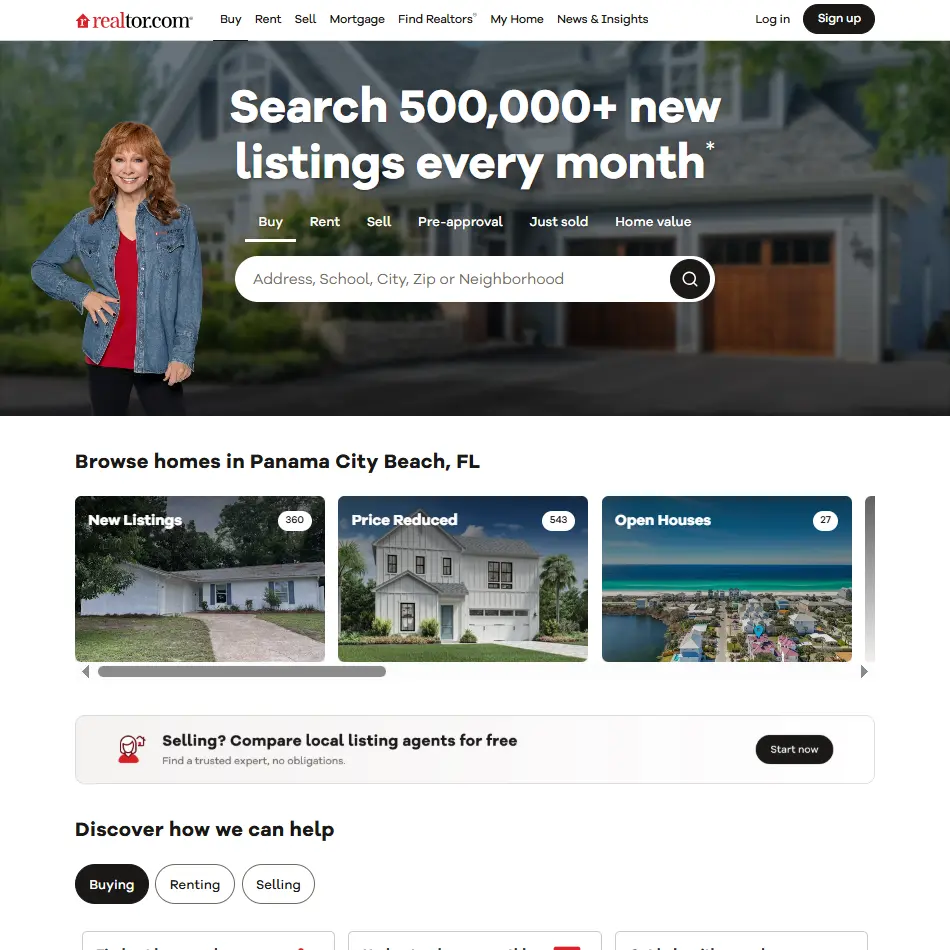

Realtor.com is tightly linked to MLS feeds and is especially strong for status accuracy — “under contract,” “pending,” or “sold” markers tend to be more consistent than on some other portals.

Best for:

Scraping & data nuances:

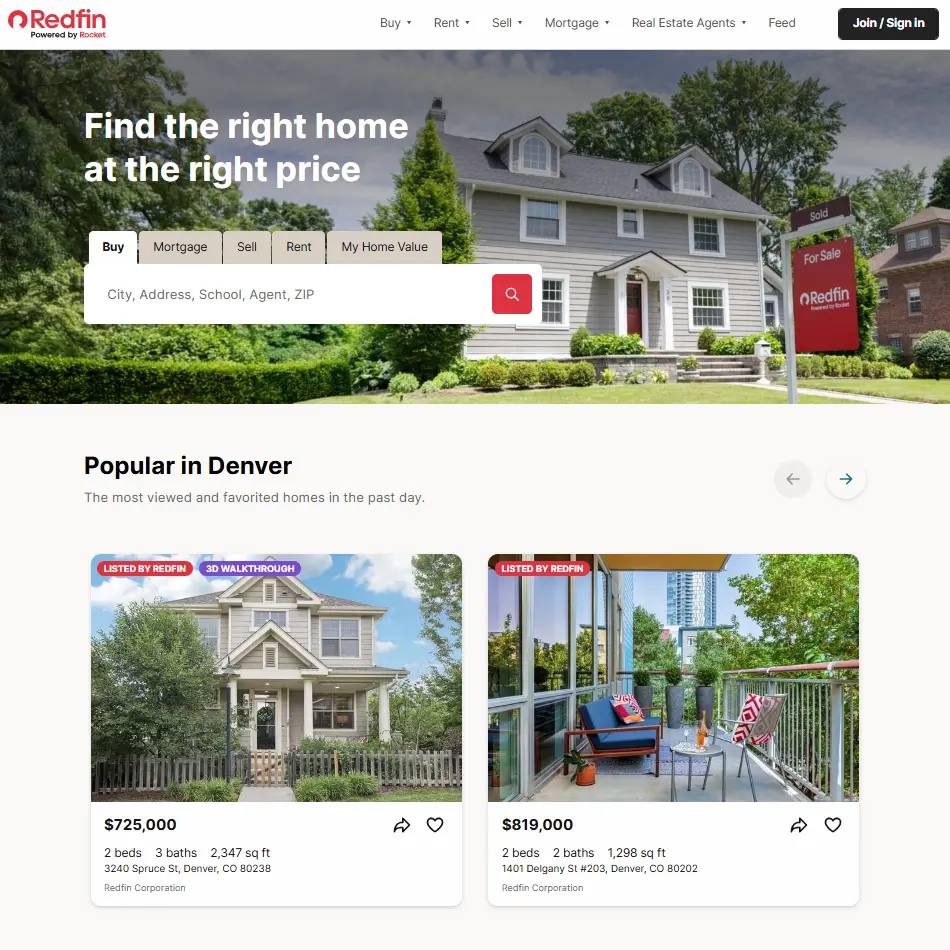

Redfin is a favorite among data teams because its listings often expose granular price histories, open house data, and indicators of listing “heat” (views, favorites).

Best for:

Scraping & data nuances:

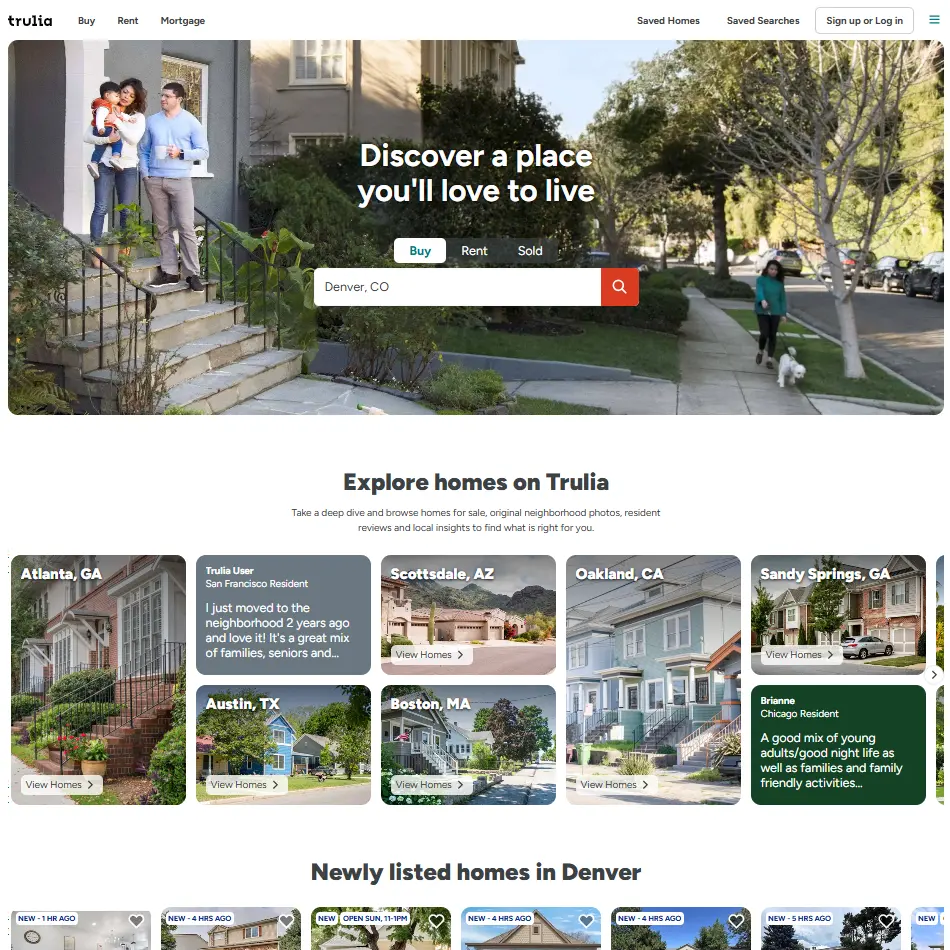

Trulia focuses heavily on neighborhood context — crime maps, school ratings, commute times, and user reviews — which makes it an excellent complement to Zillow and Realtor.com when you want to understand “how people live,” not just where properties are listed.

Best for:

Scraping & data nuances:

Apartments.com is often the backbone for multi-family and rental-only analysis in the U.S. — especially for operators tracking rents across portfolios and markets.

Best for:

Scraping & data nuances:

For Canada, clients often mix REALTOR.ca and niche players like Zolo. ScrapeIt’s case study on Zolo real estate data scraping shows how a client receives a daily dataset of “sold” listings, including full property and agent details, to power analytics around closed deals.

Key nuance: some Canadian portals restrict “sold” sections to logged-in users; your scraping solution must manage authenticated sessions and respect local compliance rules around personal data.

“Having yesterday’s sold deals in my inbox every morning changed how I talk to our sales team. We stopped guessing and started coaching.”

— Head of sales, brokerage network in Canada

Unlike the U.S., Europe is a patchwork of national champions. ScrapeIt’s Real Estate Sites That We Scrape list and the EU-focused case studies show that most serious projects involve several portals per country plus a strong normalization layer.

Rightmove is the primary residential portal in the UK and a must-have for anyone doing pricing, inventory, or agent-performance analysis in England, Scotland, or Wales.

Best for:

Scraping & data nuances:

ImmoScout24 is a key data source across Germany and, depending on your configuration, other DACH markets. It offers strong coverage of both residential and commercial assets.

Best for:

Scraping & data nuances:

Funda dominates the Dutch residential market and frequently appears in ScrapeIt’s EU projects. In the case study on 230,000 daily rows across five EU property sites, Funda leads a bundle that also includes portals like Pararius, Rentola, and Zimmo for Belgium.

Best for:

Scraping & data nuances:

Some portals are small on a global scale but absolutely central locally. Among those ScrapeIt frequently supports are:

Scraping & data nuances: EU sites are often technically “easier” than some U.S. portals because their anti-bot protection tends to be lighter, but cross-language, cross-currency, and cross-regulation differences make normalization and translation critical parts of the job.
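To make the normalization step concrete, here is a minimal Python sketch that maps raw rows from two portals onto one shared schema with prices in EUR. The field names in `FIELD_MAPS`, the `Listing` schema, and the conversion rates are all illustrative assumptions, not the actual structure of any portal or of ScrapeIt's exports.

```python
from dataclasses import dataclass

# Placeholder conversion rates (assumption); a real pipeline would load
# dated rates from an FX source instead of hardcoding them.
EUR_PER_UNIT = {"EUR": 1.0, "GBP": 1.17, "CHF": 1.04}

# Hypothetical per-portal field names mapped onto one shared schema.
FIELD_MAPS = {
    "funda": {"id": "listing_id", "vraagprijs": "price", "woonoppervlakte": "area_m2"},
    "immoscout24": {"expose_id": "listing_id", "kaufpreis": "price", "wohnflaeche": "area_m2"},
}


@dataclass(frozen=True)
class Listing:
    portal: str
    listing_id: str
    price_eur: float
    area_m2: float


def normalize(portal: str, raw: dict, currency: str = "EUR") -> Listing:
    """Rename portal-specific fields and convert the price to EUR."""
    mapping = FIELD_MAPS[portal]
    # Pick only the mapped fields; extra keys in the raw row are ignored.
    fields = {target: raw[source] for source, target in mapping.items()}
    return Listing(
        portal=portal,
        listing_id=str(fields["listing_id"]),
        price_eur=round(float(fields["price"]) * EUR_PER_UNIT[currency], 2),
        area_m2=float(fields["area_m2"]),
    )


row = normalize("funda", {"id": 42, "vraagprijs": "450000", "woonoppervlakte": 85})
```

Keeping the per-portal differences in a mapping table rather than in code means that adding a sixth site is mostly a configuration change, which is one plausible way to keep a multi-portal export maintainable.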

“We didn’t realize how much work was hidden in just ‘making five EU sites look like one dataset’ until we saw the 230k-row daily export.”

— Product owner, European housing portal

In LATAM, activity is split across several strong local portals rather than one dominant player. ScrapeIt maintains dedicated scrapers for multiple Spanish- and Portuguese-language platforms that together give robust coverage of Mexico, Brazil, Colombia, Peru, and neighboring markets.

Inmuebles24 is a key portal for Mexico, widely used by both agencies and private sellers.

Best for:

Scraping & data nuances: You need solid address normalization (colonias, barrios, neighborhood nicknames) and currency handling for MXN vs. USD in coastal or luxury submarkets.
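As a rough illustration of the currency handling, the Python sketch below tags each raw price string as MXN or USD before any aggregation. It assumes that USD prices carry an explicit `USD` or `US$` marker, that everything else is in pesos, and that amounts use comma thousands separators with a dot for decimals; real listings may need more markers and stricter validation.

```python
import re

# Markers assumed to indicate a dollar-denominated price.
USD_MARKERS = ("USD", "US$")


def parse_price(text: str) -> tuple[float, str]:
    """Return (amount, currency) from a raw price string; MXN unless USD is marked."""
    upper = text.upper()
    currency = "USD" if any(marker in upper for marker in USD_MARKERS) else "MXN"
    # First run of digits, allowing comma thousands separators and a decimal part.
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if match is None:
        raise ValueError(f"no numeric amount in {text!r}")
    amount = float(match.group().replace(",", ""))
    return amount, currency


amount, currency = parse_price("USD 350,000")
```

Storing the original currency next to the amount, instead of converting immediately, keeps the dataset auditable when exchange rates move.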

VivaReal (now part of Grupo ZAP) is a cornerstone of Brazilian online real estate.

Best for:

Scraping & data nuances: Expect Portuguese-language fields and rich amenity descriptions; careful text normalization is needed if you are aggregating cross-country datasets.

AdondeVivir is a leading portal in Peru, while Metrocuadrado plays a similar role in Colombia.

Typical LATAM patterns ScrapeIt handles:

For the MENA region and parts of Africa, ScrapeIt commonly works with a cluster of portals that together cover the Gulf, Turkey, and key African markets.

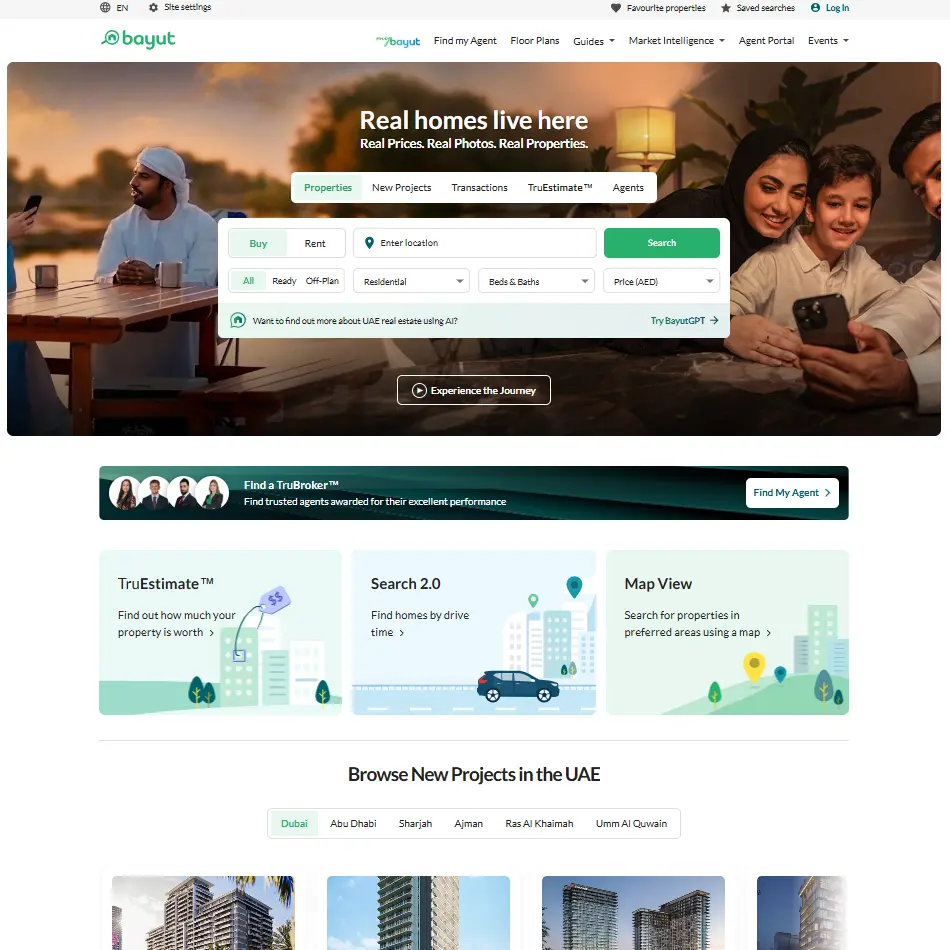

Bayut and Dubizzle together provide deep coverage of residential and commercial listings across the UAE and, through associated brands, neighboring GCC markets.

Best for:

Scraping & data nuances:

Sahibinden is one of the Turkish sites most frequently requested in ScrapeIt projects, covering not just property but also vehicles and general classifieds.

Best for:

Scraping & data nuances: Heavy use of Turkish-language abbreviations and mixed-script listings means you need good text standardization to avoid duplicate or mis-classified assets.
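One small piece of that standardization can be sketched in Python as follows. The function lowercases text using Turkish rules for dotted and dotless I, collapses whitespace, and expands a few common address abbreviations. The abbreviation map is a tiny illustrative sample, and the function name is hypothetical; the sketch also assumes the text is Turkish, since the I mapping would be wrong for embedded foreign words.

```python
import unicodedata

# Map the capital dotted and dotless I explicitly, because str.lower() applies
# language-neutral rules ("I" -> "i") that are wrong for Turkish.
_TR_UPPER_I = str.maketrans({"\u0130": "i", "I": "\u0131"})

# Small illustrative abbreviation map (assumption); a real project would
# maintain a much longer, reviewed list.
ABBREVIATIONS = {"mah.": "mahallesi", "cad.": "caddesi", "sk.": "sokak"}


def normalize_tr(text: str) -> str:
    """Lowercase with Turkish I rules, collapse whitespace, expand abbreviations."""
    # NFKC composes "I" + combining dot into the single dotted capital I.
    text = unicodedata.normalize("NFKC", text)
    text = text.translate(_TR_UPPER_I).lower()
    tokens = [ABBREVIATIONS.get(token, token) for token in text.split()]
    return " ".join(tokens)


same = normalize_tr("SATILIK  DA\u0130RE") == normalize_tr("sat\u0131l\u0131k daire")
```

Comparing listings on the normalized form rather than the raw title is what lets two differently typed versions of the same address collapse into one record.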

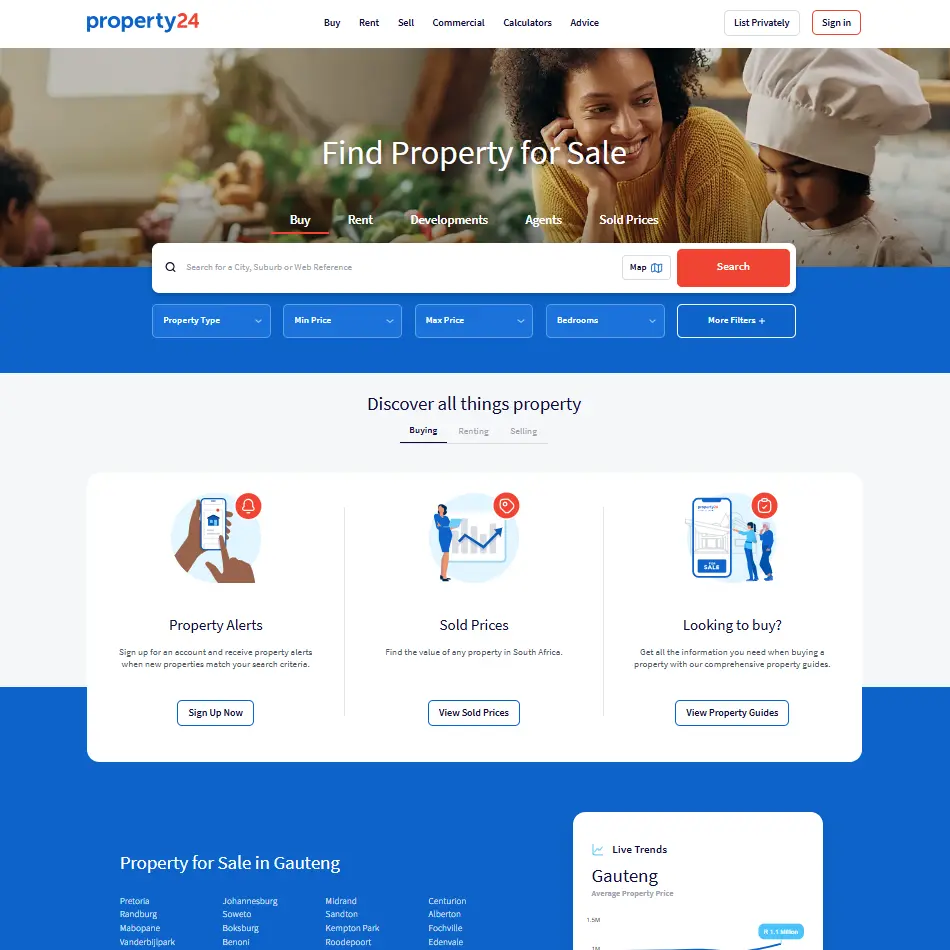

In Africa, Property24 is a critical portal, particularly in South Africa and several neighboring markets.

Best for:

Scraping & data nuances: Address structures can be inconsistent; ScrapeIt typically includes address cleaning and geo-enrichment to make the data usable for modeling and mapping.

Asia–Pacific is structurally different: very dense markets, high online activity, and portals that blend classic listings with heavy filter logic, map views, and promotional placements. ScrapeIt maintains several India- and APAC-focused scrapers.

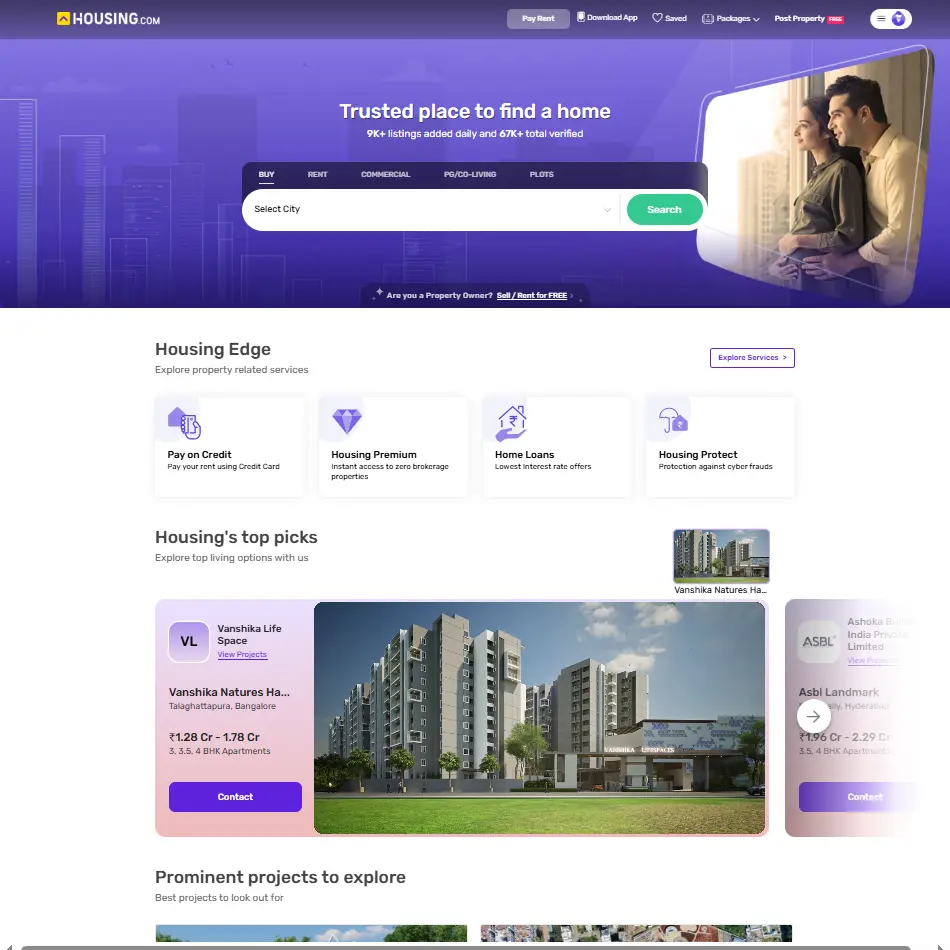

India is usually covered with a three-portal core: Housing.com, 99acres, and Magicbricks.

Best for:

Scraping & data nuances:

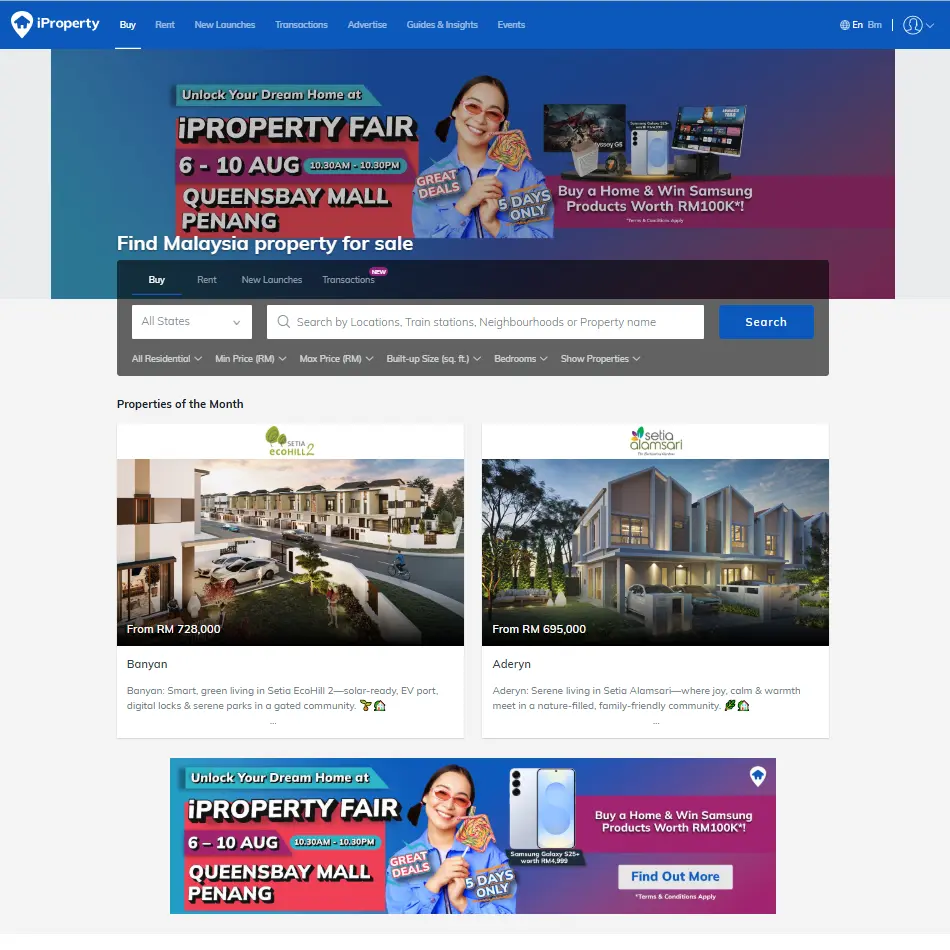

For Southeast Asia, ScrapeIt often works with iProperty (Malaysia and surrounding markets) and Lamudi (active in several emerging Asian and LATAM markets).

Scraping & data nuances:

Beyond big portals, many of the highest-ROI scraping projects in real estate focus on niche or “hidden gem” sites: FSBO boards, auction sites, local classifieds. ScrapeIt keeps a set of scrapers specifically for these use cases.

FSBO.com (For Sale By Owner) is a classic example of an owner-listing hub that agencies, investors, and iBuyers monitor aggressively.

Best for:

Scraping & data nuances: Volume per day is smaller than on big portals, but lead value per row is higher. Filtering out non-serious listings and duplicates is important to avoid wasting sales time.
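A minimal Python sketch of the duplicate filter might look like this. It assumes each row is a dict with hypothetical `address`, `price`, and `phone` fields and treats rows as duplicates when all three match after light cleanup; fuzzier matching (typos, reworded addresses) would need more than this.

```python
def dedupe_listings(rows: list[dict]) -> list[dict]:
    """Keep the first row seen for each (address, price, phone) key."""
    seen: set[tuple] = set()
    unique = []
    for row in rows:
        key = (
            " ".join(row["address"].lower().split()),  # case and spacing insensitive
            round(float(row["price"])),                # ignore formatting of the amount
            "".join(ch for ch in row["phone"] if ch.isdigit()),  # digits only
        )
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


rows = [
    {"address": "12 Oak St", "price": "350000", "phone": "(555) 010-1234"},
    {"address": "12  oak st", "price": 350000.0, "phone": "555-010-1234"},
    {"address": "98 Pine Ave", "price": "410000", "phone": "555-010-9999"},
]
unique_rows = dedupe_listings(rows)
```

Because the first occurrence wins, feeding the rows in scrape order preserves the earliest sighting of each listing, which matters when lead freshness drives the sales workflow.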

In many regions, the challenge is not just scraping the portal, but classifying who is really behind each listing. The ScrapeIt case study on data scraping for the real estate industry shows how a client receives 85,000+ rows per day filtered down to “true private” sellers to fuel an agency’s outbound funnel.
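The case study does not describe how that filtering is implemented, so the following Python sketch is only one plausible heuristic: treat a phone number that appears on many listings as an agency and keep the rest as likely private sellers. The `phone` field, the function name, and the `max_listings` threshold are assumptions for illustration.

```python
from collections import Counter


def flag_private_sellers(rows: list[dict], max_listings: int = 2) -> list[dict]:
    """Return rows whose phone number appears on at most `max_listings` rows."""

    def digits(phone: str) -> str:
        # Compare numbers on digits only, so formatting differences do not matter.
        return "".join(ch for ch in phone if ch.isdigit())

    counts = Counter(digits(row["phone"]) for row in rows)
    return [row for row in rows if counts[digits(row["phone"])] <= max_listings]


listings = [
    {"id": 1, "phone": "555-010-0001"},
    {"id": 2, "phone": "555-010-0002"},
    {"id": 3, "phone": "(555) 010 0002"},
    {"id": 4, "phone": "555.010.0002"},
]
private = flag_private_sellers(listings)
```

In practice a phone-count rule would be combined with other signals, such as agency keywords in the description, before a lead reaches the sales team.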

“If our call is not in the first three calls a private seller receives, the lead is already gone. Automated scraping is the only way to consistently be in that top three.”

— Sales manager, national agency network

The concrete portals you choose will depend on your role and region, but most successful ScrapeIt projects follow a similar structure:

If you are planning a regional real estate data pipeline, three next steps are usually enough to get moving:

Done well, regional real estate web scraping does not just give you more data. It gives you a synchronized, multi-country view of pricing, supply, and demand — the kind of view that lets portals grow faster, agencies win more mandates, and investors move before the market catches up.

1. Why is a regional approach to real estate web scraping more effective than focusing on a "Global Top 5" list?

A regional approach is necessary because the key property portals, demand drivers, and data nuances vary significantly by geography (e.g., Boston vs. Berlin). A successful strategy requires a structured, regional playbook that identifies the local champions and normalizes country-specific fields (like statuses, pricing, and regulations) across multiple sites, which a simple "Global Top 5" list cannot achieve.

2. What are the core property portals recommended for the North American market (U.S. & Canada)?

The core U.S. bundle typically includes Zillow, Realtor.com, Redfin, Trulia, and Apartments.com. For Canada, key sources like Zolo.ca and REALTOR.ca are often used, with a focus on capturing "sold" listing data where available.

3. How does the European real estate data landscape differ from the U.S. market?

Europe is characterized by a patchwork of strong national champions (e.g., Rightmove in the UK, ImmoScout24 in Germany, Funda in the Netherlands), whereas the U.S. is dominated by a few major consumer brands. European scraping projects require aggregating data from several portals per country, demanding a strong layer of normalization for cross-language, cross-currency, and local regulation-specific fields.

4. Besides the large, general portals, what are some high-value niche sites that real estate teams should monitor?

High-value niche sources often include "For Sale By Owner" (FSBO) hubs like FSBO.com, or portals focused on owner-listed properties like NoBroker (India). These sites provide earlier access to motivated sellers, off-market inventory, or distressed assets, offering a crucial edge for lead-generation teams and iBuyers.

5. What is the final deliverable and key benefit of using a managed regional scraping service like ScrapeIt?

The key benefit is receiving a single, synchronized, multi-country view of pricing, supply, and demand delivered as a ready-to-use dataset, not just raw scraping tools. The deliverable is a standardized, normalized schema across all chosen portals (e.g., Zillow + Rightmove + ImmoScout24 are mapped to one logical format), allowing BI, pricing, and AI teams to work with one clean data source.

